3.1 All but War Is Simulation

Conflict simulations have been in use since violence between humans first metastasized into war. The games chess and Go, for example, were both inspired by military simulations. But the first battlefield victory thought to owe something to extensive simulation training is credited to the strategy board game Kriegsspiel (from the German for “war game”), developed in 1811 for the new officer class of the Prussian Army.

Kriegsspiel was not designed to generate usable data but to train generals in a variety of hypothetical situations, enabling them to better analyze, interpret, and make decisions during battle. Although the game was offered for sale in France and Russia, nobody outside the German states took much interest until the Prussian Army’s decisive victory in the Franco-Prussian War (1870–71), which it won despite having no clear advantage in munitions, soldiers, or experience. Instead, the credit went to its cadre of officers: a generation of strategists raised on tabular calculations on a synthetic battlefield.14

In Kriegsspiel, outcomes are decided using calculation tables. Various mathematical procedures have since been devised to predict everything from the effect of morale on outcomes to supply chain management. Two of the best known are the Linear Law and the Square Law, formulated by the British engineer Frederick Lanchester in 1914. The Square Law, often called “the attrition model,” holds that the power of a modern military force is proportional to the square of its number of units, because with long-range weapons every unit can engage any enemy and so each side’s losses scale with the size of the opposing force. The Linear Law, meanwhile, was applied to pre-industrial combat using spears rather than long-range weapons. A later refinement, the Salvo Model, was developed to represent naval combat as a series of missile salvos exchanged between opposing fleets.15
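Lanchester’s two laws and the salvo exchange reduce to a few lines of arithmetic. The Python sketch below is illustrative rather than a reproduction of Lanchester’s own notation: the function names, the per-unit kill rates `alpha` and `beta`, the Euler time step `dt`, and the salvo parameters are all hypothetical. Under the Square Law each side’s losses scale with the size of the opposing force, which keeps α·A² − β·B² constant over the battle; under the Linear Law the conserved quantity is α·A − β·B. The salvo function follows the basic salvo equations as they are commonly stated, with losses clamped so a fleet cannot lose more ships than it has.

```python
import math


def square_law_battle(a0, b0, alpha, beta, dt=0.001):
    """Euler-integrate Lanchester's Square Law (aimed, long-range fire).

    dA/dt = -beta * B   (A's losses scale with the number of B units)
    dB/dt = -alpha * A  (B's losses scale with the number of A units)
    alpha, beta: hypothetical kill rates per unit per unit of time.
    Returns the survivors (a, b) once one side reaches zero.
    """
    a, b = float(a0), float(b0)
    while a > 0 and b > 0:
        # Tuple assignment updates both sides from the previous step's values.
        a, b = a - beta * b * dt, b - alpha * a * dt
    return max(a, 0.0), max(b, 0.0)


def square_law_survivors(a0, b0, alpha, beta):
    """Closed form: alpha*A**2 - beta*B**2 is invariant during the battle."""
    k = alpha * a0**2 - beta * b0**2
    if k > 0:
        return math.sqrt(k / alpha), 0.0
    if k < 0:
        return 0.0, math.sqrt(-k / beta)
    return 0.0, 0.0  # mutual annihilation


def linear_law_survivors(a0, b0, alpha, beta):
    """Linear Law (one-on-one melee): alpha*A - beta*B is invariant."""
    k = alpha * a0 - beta * b0
    if k > 0:
        return k / alpha, 0.0
    if k < 0:
        return 0.0, -k / beta
    return 0.0, 0.0


def salvo_exchange(a, b, alpha, beta, a_def, b_def, a_stay, b_stay):
    """One simultaneous missile exchange in the basic salvo model.

    alpha, beta:    well-aimed missiles fired per ship of A and of B
    a_def, b_def:   incoming missiles each ship of A or B can intercept
    a_stay, b_stay: hits needed to put one ship of A or B out of action
    """
    a, b = float(a), float(b)
    loss_a = (beta * b - a_def * a) / a_stay
    loss_b = (alpha * a - b_def * b) / b_stay
    # A fleet cannot lose a negative number of ships or more ships than it has.
    loss_a = min(max(loss_a, 0.0), a)
    loss_b = min(max(loss_b, 0.0), b)
    return a - loss_a, b - loss_b


if __name__ == "__main__":
    # Two equally effective forces: 1,000 units against 500.
    print(square_law_survivors(1000, 500, 0.01, 0.01))  # A keeps about 866 units
    print(square_law_battle(1000, 500, 0.01, 0.01))     # the simulation agrees closely
    print(linear_law_survivors(1000, 500, 0.01, 0.01))  # A keeps about 500 units
    print(salvo_exchange(10, 8, 4, 4, 2, 2, 2, 2))      # (4.0, 0.0)
```

With equal effectiveness, the larger force wins with about 866 of its 1,000 units intact under the Square Law but only about 500 under the Linear Law, which is why the Square Law rewards concentrating numbers in modern combat.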

Throughout the twentieth century, a growing number of variables (political, environmental, energetic) were incorporated into military simulations. In the United States, simulation practice and technology became enmeshed with state security and geopolitics, evolving in tandem with the development of a material substrate on which the necessary computations for predicting outcomes would be run: transistors and semiconductors.16

A permanent wargaming facility was created at the Pentagon in 1954 under the motto “All but war is simulation,” attributed to the seventeenth-century samurai-philosopher Miyamoto Musashi, who emphasized mental preparation and visualization in advance of combat. The American general Douglas MacArthur added his own spin, writing that “in no other profession are the penalties for employing untrained personnel so appalling or so irrevocable as in the military.”17

The longest-standing pact between services of the US military is a 1950 agreement between the army and the navy to share training devices. In 1966, the agencies responsible for training soldiers moved to Orlando, Florida, where they introduced a synthetic flight training system for the Bell UH-1 “Huey” helicopter, the workhorse of the air cavalry during the Vietnam War.18 Around the same time, the Naval Training Device Center introduced the multiple integrated laser engagement system (MILES), which used lasers and blank cartridges to enhance the realism of training and remained in constant use until the 2020s. The technology was not limited to the military, however. Games manufacturer Milton Bradley released a similar system as a set of phaser guns to coincide with the release of Star Trek: The Motion Picture (1979), creating the market for recreational laser tag.

3.2 America’s Army and Unreal Engine

The production of military simulations gradually opened up to civilian researchers and private institutions. RAND Corporation, Harvard University, the National Defense University, and the Massachusetts Institute of Technology each ran simulations for the Pentagon, including models of the Vietnam War, the fall of the Shah of Iran, and tensions between India, Pakistan, and China. RAND’s early success was connected to Hollywood by way of Herman Kahn’s “screenplay scenarios,” which described in qualitative as well as quantitative terms how a nuclear exchange between the great powers might play out. In 1998, the US Army established a contract with the University of Southern California’s Institute for Creative Technologies in Los Angeles. The USC contract signaled a desire to share technology between the entertainment world and the military, a pact that would continue in the games industry.

The US Army and software company Epic Games worked together for eight years to produce the second generation of Epic Games’s graphics engine, Unreal. The engine was launched along with the game it was designed to build: America’s Army (2002), a first-person tactical shooter that offered a virtual experience of soldier training and deployment. The game was designed at the Modeling, Virtual Environments and Simulation (MOVES) Institute at the Naval Postgraduate School in Monterey, California.

The game was commissioned by Colonel Casey Wardynski, director of the Office of Economic and Manpower Analysis at West Point, New York, who told the New York Times that its original aim was to cut one of the army’s biggest expenses, recruitment: “If the game draws 300 to 400 recruits, it will have been worth the cost.”

A 2008 study from the Massachusetts Institute of Technology found that “30 percent of all Americans ages 16 to 24 [years] had a more positive impression of the Army because of the game” and, even more surprisingly, “the game had more impact on recruits than all other forms of Army advertising combined.”19 Counter to expectations, the game was a success with civilians, too, described as the “biggest surprise of the year” by IGN.20 It was the first overt use of a video game as a recruitment and propaganda tool.21

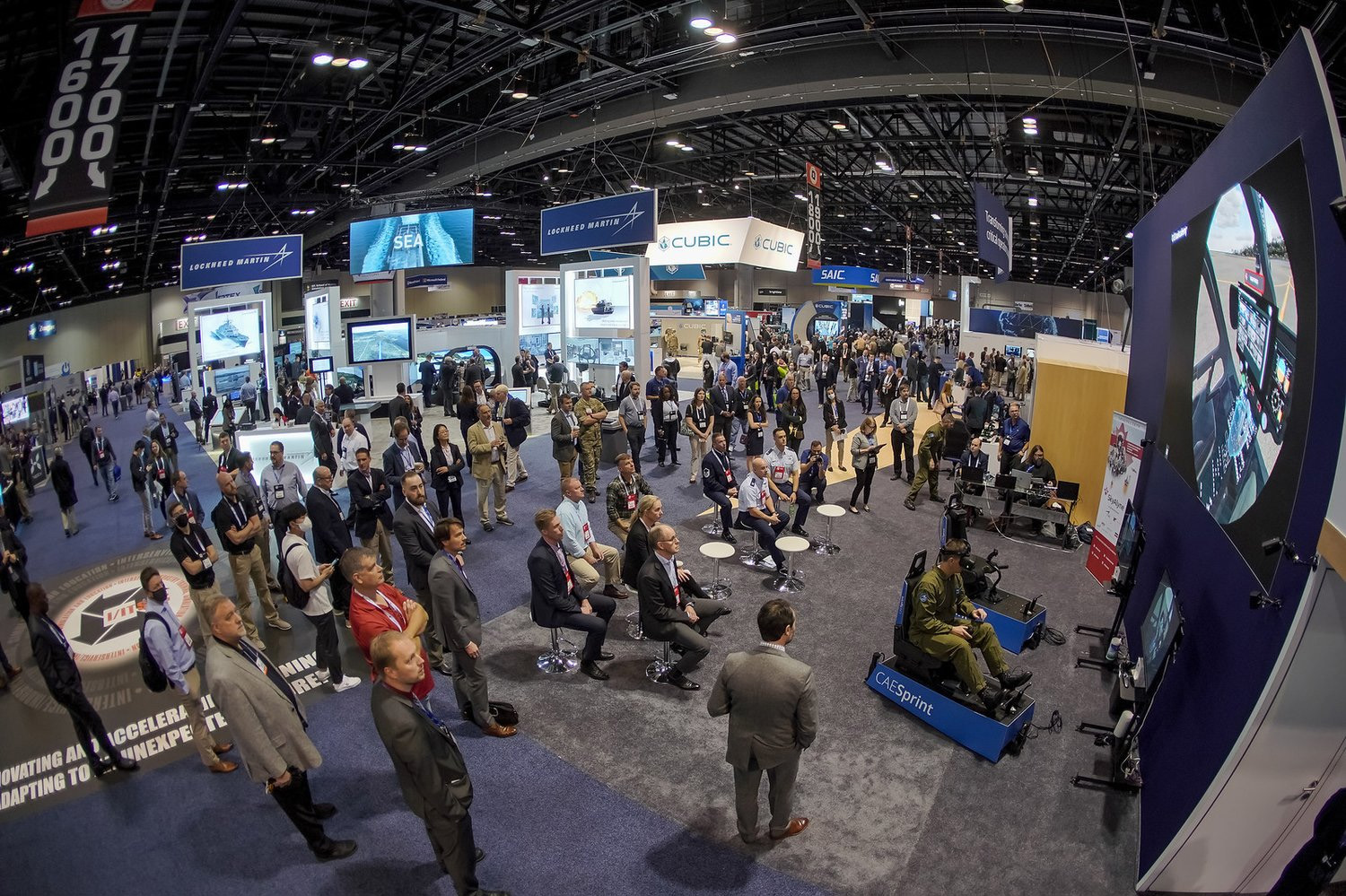

After the invasions of Iraq and Afghanistan, training became more field-based. The motto became “train as you fight,” emphasizing the need to model complex urban and rural terrain for war against non-conventional armies. In 2007, the various commercial, military, and academic partners in Florida formally integrated as Team Orlando, presiding over an industry then worth $4.8 billion. Orlando became home to the National Center for Simulation, as well as to the Interservice/Industry Training, Simulation and Education Conference (I/ITSEC), probably the largest simulation technology convention, where the United States showcases its innovations to the world, with fellow NATO members as its primary customers.

In 2010, the US Air Force Research Laboratory built what was then the thirty-third largest supercomputer in the world by combining 1,760 Sony PlayStation 3s. The Condor Cluster was used for radar enhancement, pattern recognition, satellite imagery processing, and artificial intelligence research, and was the fastest interactive computer in the entire US Department of Defense for a time.

No later cluster of consoles rivaled the world’s fastest supercomputers, yet each successive generation of video game consoles has driven innovation in the field. Console makers did this consistently through huge economies of scale and manufacturing subsidies, betting that software sales and a share of in-game economies would recoup their early investment. But it was the arms race between console makers and chip designers, fueled by the public’s willingness to pay for better graphics, that produced the improvements needed to overcome various bottlenecks and dead ends across the military, science, and industry. This was the era of the graphics processing unit (GPU): a distinctive computational architecture that set simulation on a steep upward curve in all areas of life.

3.3 Origins of the GPU

One of the first personal computers (PCs) to escape the laboratory and enter mass production was the Datapoint 2200, whose processor design Intel turned into its 8008 central processing unit (CPU). Intel’s previous logic chip, the 4004, was the world’s first commercially produced microprocessor, commissioned by the Japanese office devices firm Busicom for use in electronic calculators.22 The idea of a chip explicitly dedicated to computer graphics was popularized by the launch of NVIDIA’s GeForce 256 in 1999, and the term “GPU” itself had reached mainstream consciousness five years earlier, when Sony used it for the original PlayStation. Even so, NVIDIA’s frequent claim that its card was the first specialized graphics accelerator is technically inaccurate.

The first playable computer games, Bertie the Brain (1950), Tennis for Two (1958), and Spacewar! (1962), ran on custom-built systems. Similarly, arcade games such as Computer Space (1971), Pong (1972), and later Space Invaders (1978) housed their hardware in tall cabinets and used video controllers hard-coded to output the visuals for each specific game.

Like any arcade gallery, these machines demonstrated what was and could be possible, even if owning one was beyond the reach of most households. The first home game consoles, the Magnavox Odyssey (1972) and Atari 2600 (1977), appeared at a time when computers were still extremely expensive. Although the history is difficult to parse, no home console seems to have had a purpose-built programmable graphics chip until Nintendo’s Famicom in 1983.23

A GPU is a computer chip with a variety of hardware-accelerated 3D rendering features, but in the early years the term was more a change in marketing than a real difference from existing CPUs. The Commodore Amiga, launched in 1985, offloaded image processing to a set of custom graphics chips, and in 1986 Texas Instruments released the TMS34010, one of the first programmable graphics processors. But it wasn’t until intuitive graphical user interfaces such as Windows became popular that what we now think of as GPUs began to speciate and develop along a separate path.

In 1986, the Canadian company Array Technology, Inc. (ATI) launched its Wonder series of video card add-ons for IBM PCs, which supported multiple displays and let users switch resolutions. Yet these cards still relied on the system’s CPU for many core processing tasks. In the early to mid-1990s, application programming interfaces such as OpenGL (1992) and DirectX (1995) let programmers write code that worked across different graphics adapters, creating a standard software platform that games studios could target. CD-ROMs enabled greater data storage, and two games, Virtua Racing (1992) and Doom (1993), were among the standouts that pioneered 3D (or at least 2.5D) graphics. They built on the ground laid by Sonic the Hedgehog (1991) and Street Fighter II (1991), which had pushed visuals on the Sega Genesis and Super Nintendo with parallax scrolling, much richer color palettes, and lighting-related techniques such as shadows.

By the time the original PlayStation was released in 1995, consumer PC gaming was in a slump, with graphics cards such as the S3 ViRGE jokingly dubbed a “graphics decelerator” by gamers. The runaway success of Sony’s console lit a fire that spread through the hardware market. The Voodoo card from San Jose’s 3dfx Interactive, Inc., released in 1996, was followed in 1998 by the Voodoo2, one of the first consumer cards to support a multi-GPU setup, a signal that maximizing resolution and frame rate by any means necessary would become the industry’s holy grail.

3.4 GPUs in Ascendance

In 1999, NVIDIA released the first GPU in its GeForce series for domestic PC gaming. The GeForce 256 was capable of processing complex visuals that included advanced lighting and the rendering of individual pixels, though it was only with the release of the GeForce 2 (NV15) in 2000 that game developers caught up and made use of what NVIDIA co-founder Jensen Huang had hailed as “the single largest chip ever built.”

After attempting the notoriously difficult process of fabricating its own chips, 3dfx Interactive, Inc., sold its assets to the “fabless” NVIDIA at the end of 2000 and went bankrupt in 2002, leaving ATI and NVIDIA as the two most prominent companies in the field. To differentiate its product from that of its southern rival, Toronto-based ATI used the term “visual processing unit” and launched the Radeon series, its rival to GeForce, in 2000.

The launch of the PlayStation 2 in 2000 and the Xbox in 2001 introduced standard definition, a resolution of 480 lines, to home consoles, but the febrile competition between NVIDIA and ATI (which was acquired in 2006 for around $5.4 billion by Advanced Micro Devices, Inc., the creator of the Athlon and Opteron processors) meant that by the end of the 2000s, PC gaming had comfortably outstripped the next generation of consoles. By then, PC graphics cards were regularly sustaining high-definition resolution of 1,080 lines at frame rates above 60 frames per second.

In 2006, NVIDIA released the GeForce 8800 GTX: an immense, energy-hungry card with 681 million transistors, an interface that could link three cards for the most ambitious gamers, and a core clock rate of 575 megahertz.24 Although that clock was slower than those of the CPUs it was commonly paired with, the card’s massively parallel floating-point throughput far exceeded theirs. A crossover moment had arrived.

After the exponential growth of resolution and frame rates in the 2000s, GPU specs began to plateau. The jump from 1080p to 4K resolution, for instance, quadruples the number of pixels in every frame, resulting in computational and energy demands that were difficult to justify. Instead, in the 2010s, the same companies worked on software enhancements to the way lighting dynamics are calculated. They used deep learning to upscale resolution, improving visual output without a matching increase in raw rendering power, which pushed chip architecture toward diversification once again.25
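The arithmetic behind the 1080p-to-4K comparison is easy to check. This Python sketch assumes the standard 16:9 consumer formats (1920 × 1080 for 1080p, 3840 × 2160 for 4K UHD); the helper names are hypothetical, and the 1440p internal render target used to illustrate upscaling is an illustrative assumption, not a documented setting of any particular upscaler.

```python
# Hypothetical lookup table: pixel dimensions (width, height) of common
# 16:9 display standards. The 1440p entry stands in for an assumed
# lower internal render resolution used before upscaling.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def pixel_count(name: str) -> int:
    """Number of pixels that must be shaded for one frame."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(low: str, high: str) -> float:
    """How many times more pixels per frame the higher resolution needs."""
    return pixel_count(high) / pixel_count(low)


if __name__ == "__main__":
    print(pixel_count("1080p"))            # 2073600
    print(pixel_count("4K UHD"))           # 8294400
    print(pixel_ratio("1080p", "4K UHD"))  # 4.0, i.e. a 300 percent increase
    # Share of 4K output pixels actually rendered when the GPU draws
    # at 1440p internally and an upscaler fills in the rest.
    print(pixel_count("1440p") / pixel_count("4K UHD"))
```

Doubling both width and height multiplies the pixel count by four, so every 4K frame carries four times the shading work of a 1080p frame. Rendering at a lower internal resolution and upscaling means only a fraction of the output pixels (4/9 in this assumed 1440p case) is shaded natively each frame.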

The most popular NVIDIA cards of the early 2020s were 2020’s RTX 3090, marketed as the world’s first 8K gaming GPU, and 2022’s RTX 4090. Each contains dedicated cores for ray tracing and others for deep-learning super sampling, which uses AI to upscale resolution. Advances in machine learning throughout the 2010s enabled chips that can predict what we want to see, and those advances were in turn made possible by targeted AI research using GPUs originally designed for gaming. Generative AI and new hardware capable of running increasingly large models spread in tandem, transforming everything from how airplanes and ocean liners are designed to scientific simulations of fusion reactions and cosmological models that demonstrate how galaxies “breathe in” gases to create stars, then let them fall away after those stars die. The ability to model complex, dynamic environments opened new possibilities for scientific knowledge but also raised the bar for training soldiers in the theaters of war.26

3.5 Virtual Battlespace

The Czech games studio Bohemia Interactive was founded by brothers Marek and Ondřej Španěl in 1985 under its original name, Suma. The duo had developed an obsession with computers after Marek bought a Texas Instruments TI-99/4A in the early 1980s, and in 1995 they released a hovercraft-based military simulator called Gravon: Real Virtuality.

After various dead ends, the studio found success in 2001 with Operation Flashpoint: Cold War Crisis, a tactical shooting game set on a fictional archipelago whose islands were divided between the Soviet Union and the United States. The game sought to outdo competitors in realism by 3D-scanning guns and even modeling individual eyeballs. It caught the attention of the US Army, which, supported by funding from the US Defense Advanced Research Projects Agency, began using a modded version of the game called DARWARS Ambush! to train soldiers.

Bohemia would later set up a special division, Bohemia Interactive Simulations (BISim), dedicated exclusively to military training and simulation. In 2001, the studio signed an exclusive contract with the US Marines to develop the first edition of its Virtual Battlespace (VBS) series. Where a game like Call of Duty or Counter-Strike might hone a player’s motor skills and reaction times, VBS incorporated the latest ordnance, bringing game controls into alignment with existing weaponry. VBS was deployed in enormous battle simulation centers in the US and overseas, where soldiers sat side by side in monitor-filled rooms to practice the techniques, procedures, and outcomes they might encounter in the field. Much like Kriegsspiel, it was an exercise in collective cognition enabled by simulation, establishing useful reference frames and teaching groups of humans how to think.

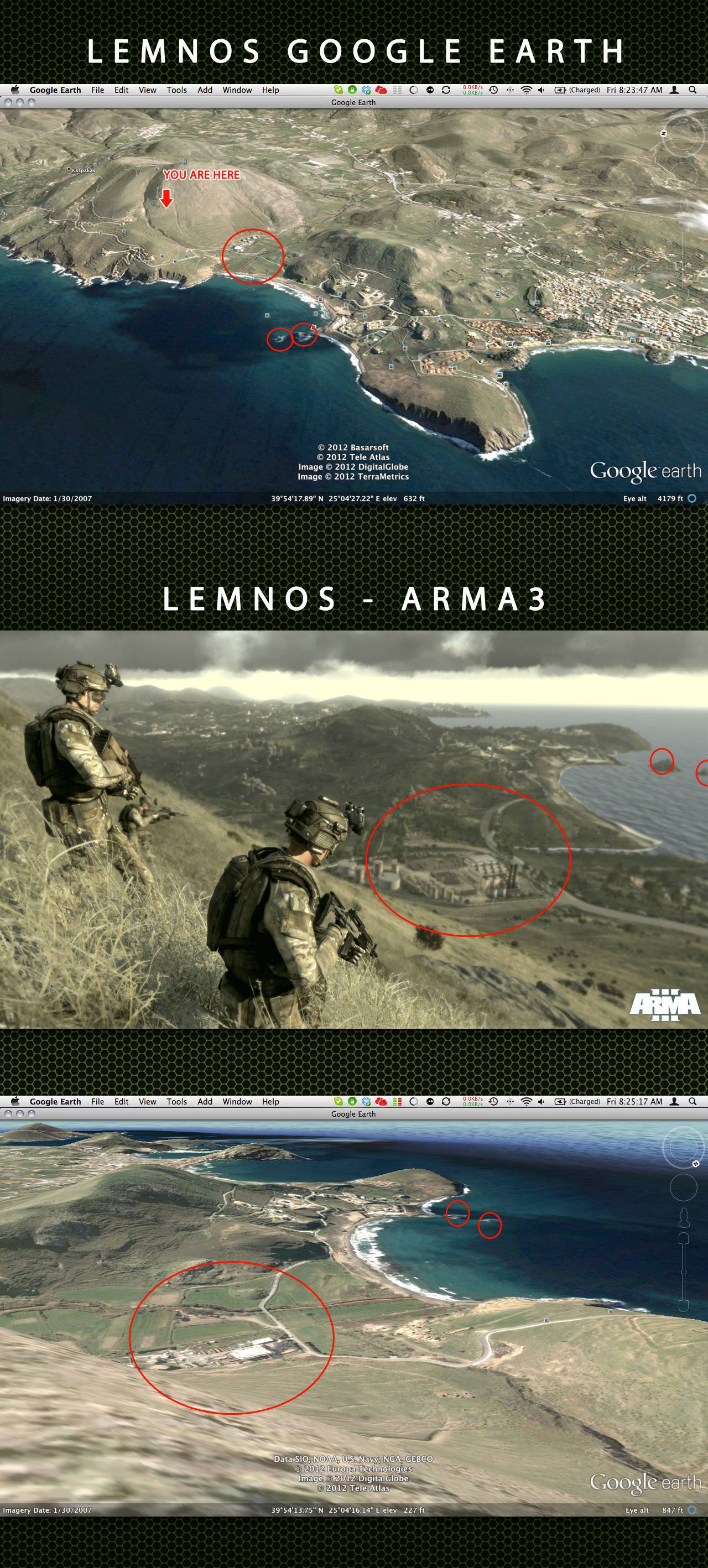

VBS3 became the entire army’s “game for training” when it was released in 2014. Meanwhile, Bohemia Interactive continued to create games for civilian use. In 2012, the company fell into financial difficulties after two of its staff, Martin Pezlar and Ivan Buchta, were caught photographing military installations on the Greek island of Lemnos, arrested on suspicion of espionage, and held for 129 days. If convicted, the pair faced twenty-year sentences; the ordeal ruined morale at Bohemia and overshadowed the launch of the studio’s latest game, Carrier Command: Gaea Mission. In the end, then Czech president Václav Klaus stepped in and Bohemia encouraged gamers to petition the Greek government, both of which contributed to the pair’s eventual release.

3.6 One World Terrain

After the occupations of Iraq and Afghanistan, the US Army, whose capital injections had rescued Bohemia Interactive more than once, stressed the need to improve close combat training on the front line, where forces accounting for just 4 percent of all personnel suffered 90 percent of total casualties. The US’s 2018 National Defense Strategy included a commitment “to provide a cognitive, collective, multi-echelon training and mission rehearsal capability” and combine “virtual, constructive and gaming training environments into a single Synthetic Training Environment (STE).”27 The commitment was both inspired by and an accelerator of a game studio culture increasingly devoted to generating large open worlds that more fully embraced the firepower provided by banks of next-generation GPUs.

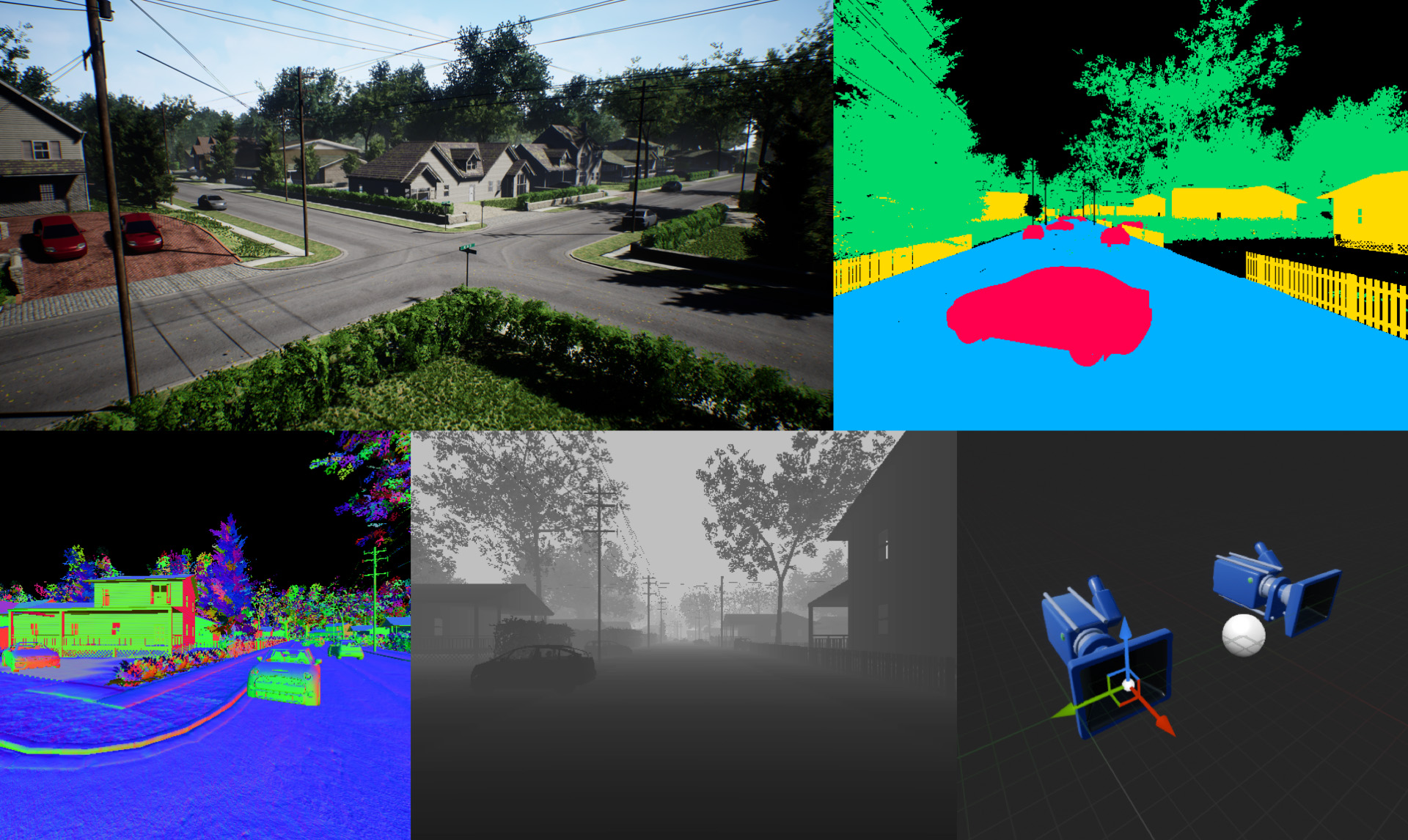

Bohemia set to work on a specific aspect of the mission known as One World Terrain, which aspired to create “a realistic, common, accessible and automated 3D terrain data set for simulation and, potentially, mission command and intelligence systems, to conduct collective training, mission rehearsal and execution” that could leverage “game-streaming technologies enabled by new advances in fiber optic and 5G wireless networks.”28 The result was VBS4, a step change in the series described by Bohemia as “a whole-earth virtual desktop trainer and simulation host that allows you to create and run any imaginable military training scenario,”29 released to the United States and allied military customers at the start of 2020.

VBS4 is more of a game engine than a game, designed specifically for military simulation and training. It simulates the whole planet, opening with a giant blue-green orb that can be manipulated from space and altered down to individual patches of grass. It gives users a relatively straightforward interface with which to build battle spaces and integrate terrain data “from any conceivable source.” In a 2019 interview with PCMag, Peter Morrison, an Australian Army veteran and BISim’s chief commercial officer, described how the system incorporates drone-collected 3D data: “The military can fly a drone at a low level, and using photogrammetry, a highly realistic 3D model of a huge area can be constructed. Our users can quickly pull that data into the game and use it as the basis of training.”30
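Photogrammetry recovers 3D shape from overlapping photographs. Its simplest geometric core is the rectified-stereo relation Z = f·B/d: a point photographed from two camera positions a baseline B apart shifts by a disparity of d pixels between the images, and its distance Z follows from the focal length f expressed in pixels. The Python sketch below illustrates only that principle under idealized assumptions (parallel cameras, a perfectly matched feature); it is not BISim’s pipeline, and the class name, focal length, drone baseline, and pixel coordinates are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StereoPair:
    """Two overlapping photos from an idealized, rectified camera pair.

    Assumes the second exposure was taken after moving the camera
    baseline_m meters to the right, with no rotation between shots.
    """
    focal_px: float    # focal length expressed in pixels (hypothetical)
    baseline_m: float  # distance the drone moved between exposures, in meters
    cx: float          # principal point x (image center), in pixels
    cy: float          # principal point y (image center), in pixels


def triangulate(pair: StereoPair, x_left: float, y_left: float, x_right: float):
    """Recover a 3D point (X, Y, Z) in meters, in the first camera's frame.

    Uses the pinhole stereo relation Z = f * B / d, where d is the
    horizontal disparity between the matching pixels in the two images.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("a point in front of the cameras must shift left in the second image")
    z = pair.focal_px * pair.baseline_m / disparity
    x = (x_left - pair.cx) * z / pair.focal_px
    y = (y_left - pair.cy) * z / pair.focal_px
    return x, y, z


if __name__ == "__main__":
    pair = StereoPair(focal_px=1000.0, baseline_m=10.0, cx=960.0, cy=540.0)
    # A feature seen at x=1010 px in the first photo and x=960 px in the second.
    print(triangulate(pair, 1010.0, 540.0, 960.0))  # (10.0, 0.0, 200.0)
```

A real photogrammetry system must also match features across many overlapping images, estimate each camera’s position and orientation, and fuse millions of such points into a textured mesh; this sketch shows only the triangulation step for a single idealized pair.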

Trainees inhabit avatars in the simulation, which they access either at their desktops or through devices such as headsets, vehicle cockpit simulators, advanced weapons simulators, and parachute harnesses. Senior military figures use mission planning tools and deploy enemy soldiers to test and assess tactics, much as the Prussian Army had done while playing Kriegsspiel some two centuries before. What was so obviously different was the scale and resolution of these operations: from a handful of calculations to a simulation that aimed to stand in for the globe and grant users video game-style controls over it.

In 2022, BISim was acquired by British arms and aerospace manufacturer BAE Systems for $200 million. In the press release accompanying the acquisition,31 BAE Systems noted that the global spend on military training simulation environments and related services was expected to surpass $11 billion each year. VBS4 is accessible through the cloud, enabling troops on deployment to simulate conditions in the next valley over by inputting tomorrow’s weather.

As with previous innovations such as MILES, no sooner was the technology unveiled than it diffused throughout the economy. Physics engines, collision detection, scene graphs, and networking developed in tandem with (and cannot be separated from) advances in artificial intelligence. Game engines built to engineer open worlds, and graphics accelerators refined by fierce industrial competition, led to significant breakthroughs with no obvious connection to defense: in manufacturing, biotech, and the training of AIs.

By the start of the 2020s, the very same software platforms were being used to train autonomous vehicles, to plan cities, and to update climate prediction methodologies that hadn’t substantially changed since the 1970s. Software simulations that emerged from military applications became integral to understanding and predicting the weather; both fields produced models of agents and environments that were later abstracted and universalized as the Infinity Mirror grew. It is rare for a technology to find its ultimate application on the first go. Simulations are no exception.