4.1 Apollo 13 and Digital Twins

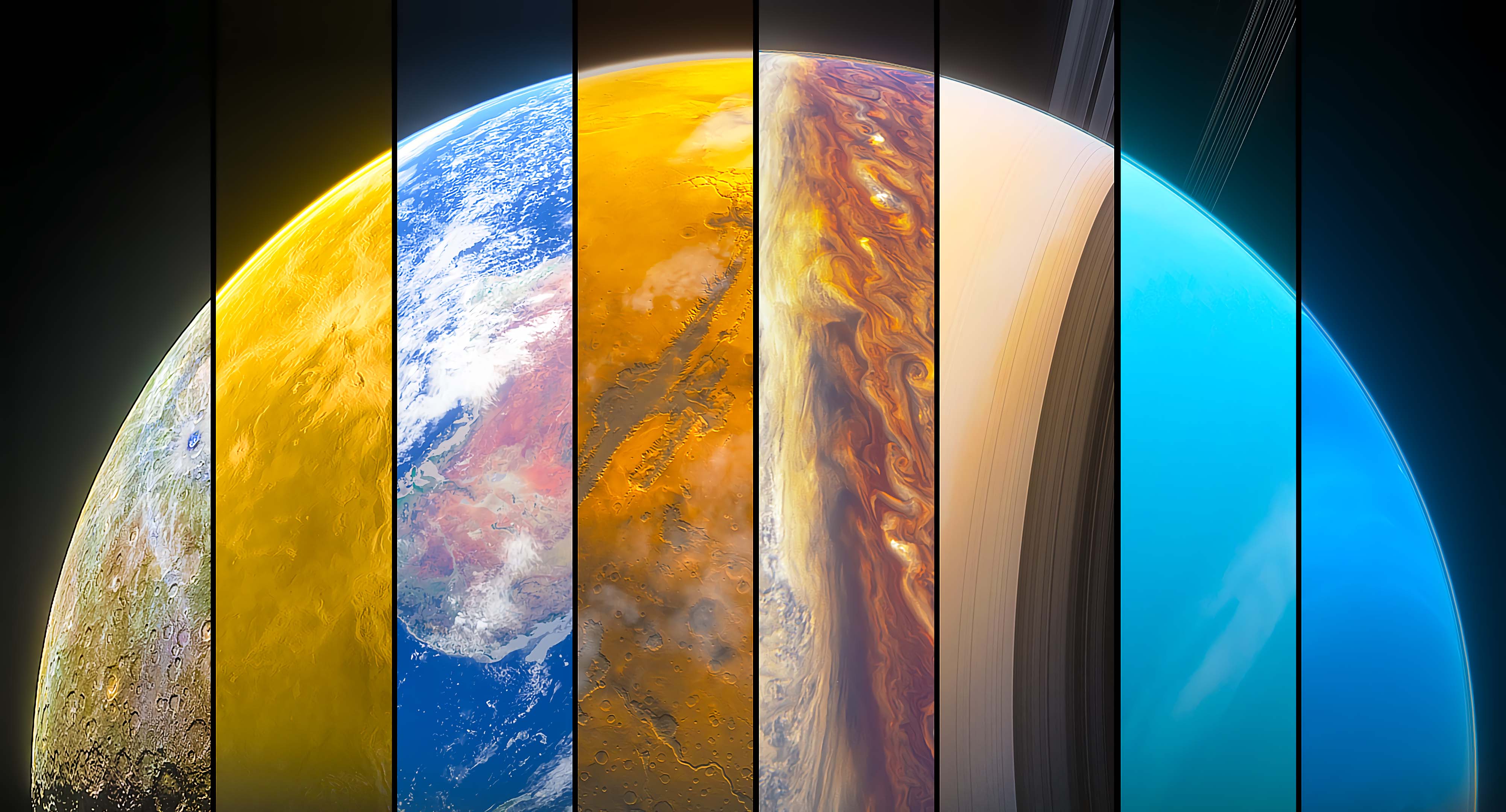

More than fifty years after Stewart Brand petitioned NASA to see a photograph of Earth from space, a handful of new projects were conceived to create planetary digital twins. Brand’s hope was that the photograph would raise global consciousness and direct it toward a threatened biosphere. While many claim he was successful, Brand himself expressed ambivalence. Half a century later, the fanfare around complex, dynamic simulations of the whole Earth suggested these could succeed in ways a static image could not.

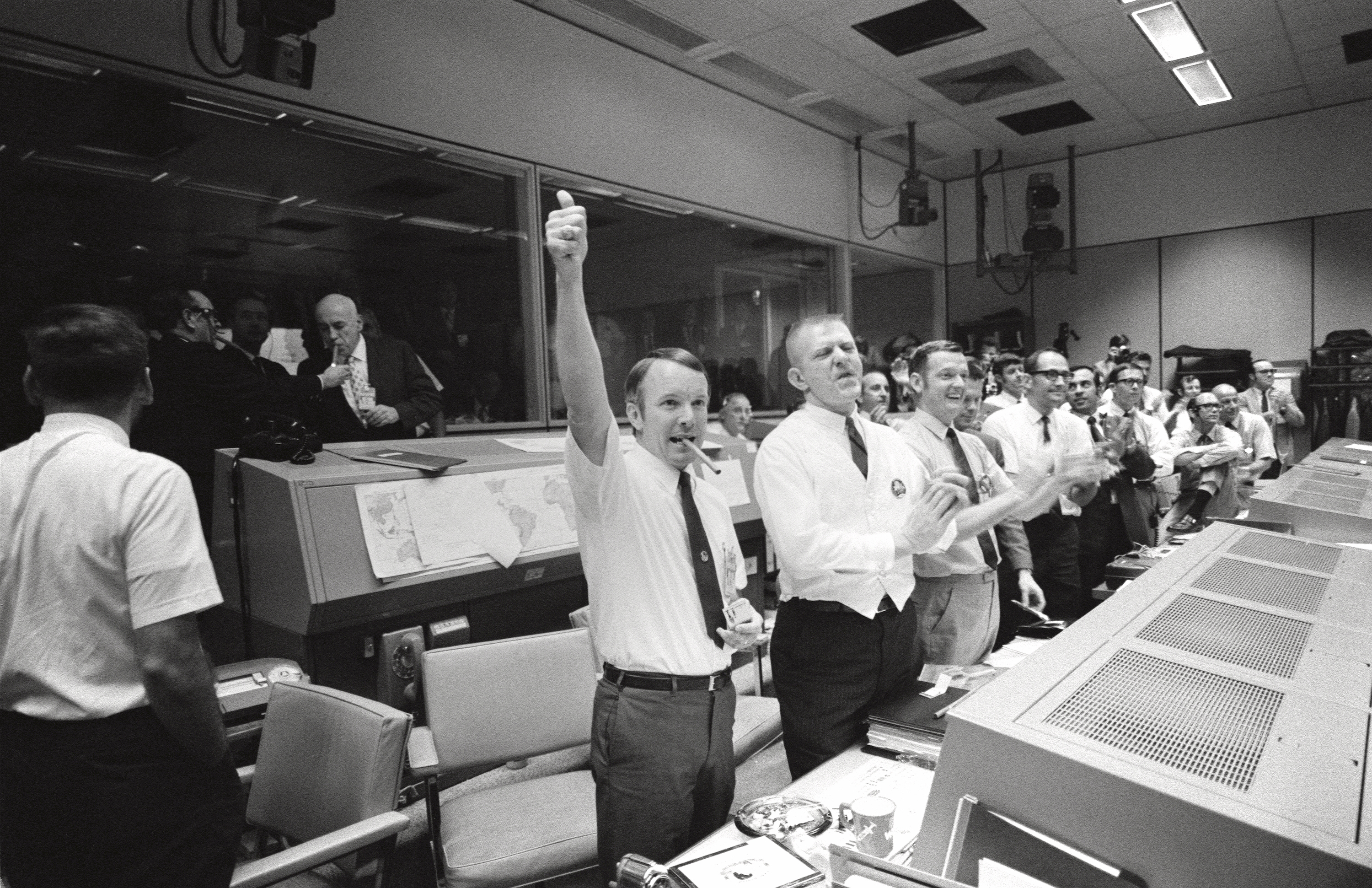

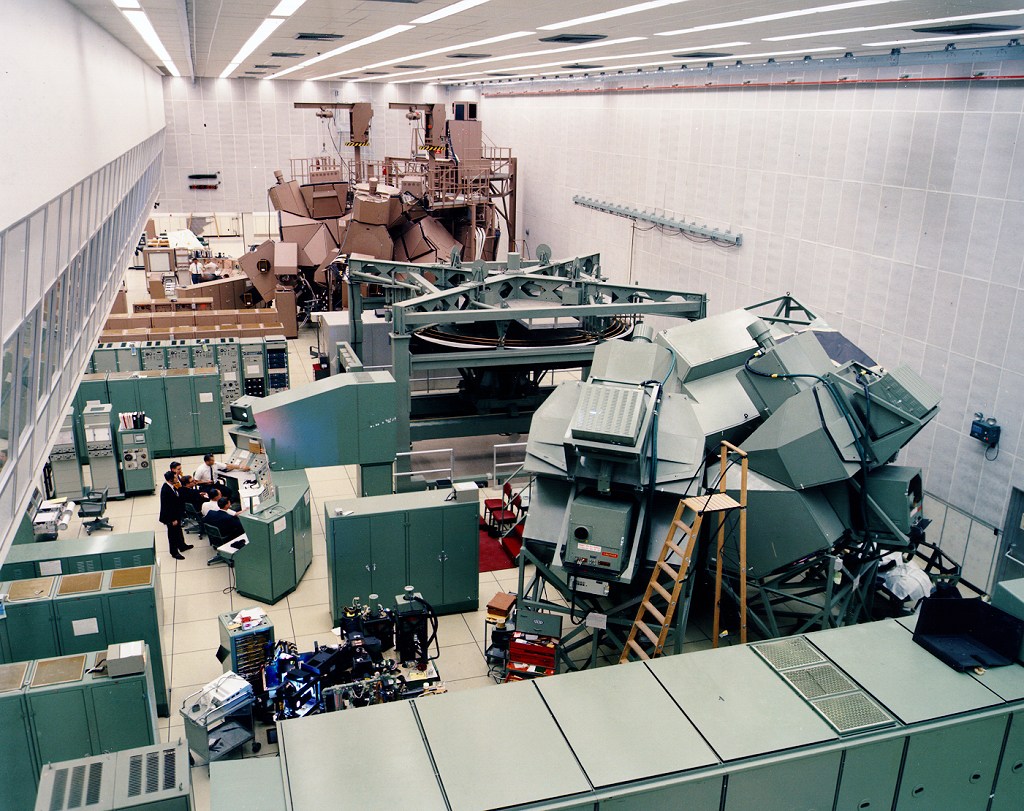

The term digital twin was coined by NASA in 2010, but the organization has retrofitted an origin story dating back to the 1950s and 1960s, when replicas of early space capsules were used for training and during space flights, mirroring changes as astronauts in orbit communicated them, with a slight delay. When the oxygen tank on Apollo 13’s service module exploded in April 1970, engineers in Houston, Texas, worked across fifteen separate simulation devices to work out how to save the crew. Ultimately, they found a way to reconfigure the lunar lander so that its oxygen supply would last long enough to return three humans to Earth.

Digital twins are bespoke, dynamically evolving systems composed of three parts: a physical object or system, a computational model of that object or system, and an equally important communication channel connecting them. The physical and computational sides are recursive: input to one alters the other. In some cases, digital twins capture the underlying physics of existing systems. In others, the twin might be based on a prototype, meaning it can provide feedback as the product is refined. Conversely, the twin itself can serve as a prototype before anything concrete is built.
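To make the three-part structure concrete, here is a minimal sketch, assuming a toy "physical" system (a leaking tank, simulated here because we have no real hardware), a model that infers the leak rate from the readings it receives, and a channel that delays each reading by two ticks, loosely echoing the delayed capsule replicas described above. All class names, rates, and thresholds are hypothetical illustrations, not NASA’s or any vendor’s design.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PhysicalTank:
    """Stand-in for the physical object: a tank leaking at a rate the twin is never told."""
    pressure: float = 100.0
    leak_rate: float = 0.8  # hidden from the model; hypothetical value
    valve_open: bool = True

    def step(self) -> float:
        """Advance one tick and return the sensor reading."""
        if self.valve_open:
            self.pressure = max(0.0, self.pressure - self.leak_rate)
        return self.pressure


@dataclass
class Channel:
    """Communication link that delivers each reading `delay` ticks after it is sent."""
    delay: int = 2
    buffer: deque = field(default_factory=deque)

    def send(self, message: float) -> None:
        self.buffer.append(message)

    def receive(self) -> Optional[float]:
        # Nothing arrives until `delay` newer messages have queued up behind the oldest one.
        if len(self.buffer) > self.delay:
            return self.buffer.popleft()
        return None


@dataclass
class TwinModel:
    """Computational model: infers the leak rate from consecutive readings."""
    last_reading: Optional[float] = None
    estimated_leak: float = 0.0

    def update(self, reading: float) -> None:
        if self.last_reading is not None:
            self.estimated_leak = self.last_reading - reading
        self.last_reading = reading

    def ticks_remaining(self) -> float:
        if self.last_reading is None or self.estimated_leak <= 0:
            return float("inf")
        return self.last_reading / self.estimated_leak


def run(ticks: int = 40, reserve_ticks: float = 90.5):
    tank, downlink, model = PhysicalTank(), Channel(), TwinModel()
    for _ in range(ticks):
        downlink.send(tank.step())    # physical object -> channel
        reading = downlink.receive()  # channel -> model, delayed
        if reading is None:
            continue
        model.update(reading)
        # Model -> physical object: close the valve if projected supply drops below the reserve.
        # (For brevity, commands travel back instantly; only the downlink is delayed.)
        if model.ticks_remaining() < reserve_ticks:
            tank.valve_open = False
    return tank, model


tank, model = run()
print(f"valve open: {tank.valve_open}, final pressure: {tank.pressure:.1f}")
```

The recursion is visible in the last step: the model’s estimate changes the physical system, which changes the next readings the model sees. Because of the channel delay, the valve closes when the tank is already at roughly 70.4 even though the model’s latest reading was about 72.0.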

Digital twins proved highly useful in manufacturing. Aircraft engines, trains, offshore oil platforms, cars, freight vessels, and wind turbines could all be designed and tested digitally before going into production, and their ongoing performance could then be monitored and understood. Not only the end product but also the assembly line on which it was produced was modeled. In this way, should an issue emerge after a product’s release, it becomes much easier to use artificial intelligence to assess the many possible remedies (product recall, process alteration, use of new materials, and so forth) in order to minimize response times and improve cost-efficiency and safety.

As the worlds of augmented and virtual reality expanded, the gap between non-digital objects and their virtual counterparts narrowed, a transition enabled by the ever-increasing number of sensors active in the world. Electric vehicles are a clear example: they contain advanced telemetry and are built on highly optimized production lines, both of which manufacturers analyze in detail in digital form.32 This recursive feedback in industrial production illustrates that the growing complexity and reach of simulation does not mean the real world is ignored; rather, simulation transforms the world and is transformed by it.

4.2 Unreal Ground Truth

The game studio Blackshark was known for solving technical challenges in open-world gaming. In 2016 Microsoft approached the studio to work on its famous Flight Simulator series. Microsoft explained that it had a high-resolution surface image of the entire planet and wanted to make it 3D. At the time, Google Earth’s 3D coverage was around 10–20 percent of the total world map, painstakingly produced using roving cars and light detection and ranging (LiDAR).

Blackshark teamed up with Maxar, the satellite technology company that provided much of the satellite imagery used by Google Maps (and that became a household name after its images of a Russian military buildup at the Ukrainian border in February 2022). More recently, they also displaced BISim as the provider of the US Army’s One World Terrain. Together, the two companies took a planet-sized scroll of two-dimensional images and matched them to data parameters such as local population density, regional architectural styles, the size of sidewalks, and the angle of shadows beside structures, which was used to correct the buildings’ angles so they would appear upright. They then applied geotypical skins (best guesses produced with machine learning) and kept running the model until they achieved more than 90 percent accuracy.

The outcome was known as Synth 3D: a model of 1.5 billion buildings and 30 million square kilometers of vegetation in 3D, available for building augmented and virtual reality applications and for predictive AI deployed at planetary scale. It let developers port familiar game mechanics into ultra-real settings, allowing players to drive anywhere, understand the impact of natural disasters, take virtual vacations, or scout locations for movies. Walmart used the system to experiment with drone delivery, though when the company tested its findings in reality, a dog that had not been included in the simulation showed up and took out the drone. Dogs were included in the follow-up version.

A 2019 paper from the Karlsruhe Institute of Technology in Germany introduced UnrealGT, a plugin “for synthetic test data generation utilizing the Unreal Engine,” used to create generic data sets for applications such as unmanned aerial vehicles and autonomous driving.33 For the most part, autonomous vehicles are trained in synthetic environments created using 3D engines like Unreal or NVIDIA’s Omniverse, the software Amazon uses to create real-time models of its warehouses. Synth 3D, in turn, pays special attention to synthetic airport reconstructions that allow airline companies to test and validate airplane sensors for autonomous landing.

4.3 Extreme Silicon Computing

Every year more sensors, cameras, and Internet of Things chips are integrated into the world in the form of satellites, ground station sensors, ocean buoys, domestic devices, and weather balloons. In 2023, Germany’s Federal Agency for Cartography and Geodesy announced it was working on a “digital twin of Germany.”34 The system produces high-resolution geodata gathered using Geiger-mode LiDAR and single-photon LiDAR, enhanced by satellite data and real-time sensor updates facilitated by AI. The stated aim was to offer “a realistic mirror image” of the nation for use in forest condition monitoring, precision agriculture, spatial planning, and adaptation to extreme weather.35

By this point, many predicted that within five to ten years weather forecasts would be continuous, hyperlocal, and 100 percent accurate. Their rationale was that advances in high-performance computing were complementing advances in AI training and inference: a symbiotic co-evolution of hardware and software, arriving just as new exascale-class supercomputers became able to simulate precisely the sort of climate-change-related weather effects that are pressuring biological, technological, and political life to adapt.36

Until the early 2020s, climate models could resolve atmospheric conditions at a ground resolution of 10 to 100 kilometers. Thomas Schulthess of ETH Zurich and Bjorn Stevens of the Max Planck Institute for Meteorology have argued that this represented “incremental” gains, enabled by Moore’s Law, that made it possible to simulate features like cyclones and gyres. Resolutions below 10 kilometers, and particularly below 1 kilometer, they argue, would be a different proposition entirely: a “great leap” only possible in the era of high-performance computing assisted by AI inference. Suddenly it would be possible to “move from crude parametric representations to an explicit, physics based, description of essential processes.”37
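Why finer grids count as a “great leap” rather than another increment can be seen with some back-of-the-envelope arithmetic. The sketch below assumes a spherical Earth, square grid cells, a fixed number of vertical levels, and an explicit time step that must shrink in proportion to grid spacing (the CFL condition). These are common rules of thumb, not figures from Schulthess and Stevens.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; assumes a spherical Earth
EARTH_SURFACE_KM2 = 4 * math.pi * EARTH_RADIUS_KM ** 2  # about 5.1e8 km^2


def grid_columns(dx_km: float) -> float:
    """Approximate number of atmospheric columns if the surface is tiled with dx_km squares."""
    return EARTH_SURFACE_KM2 / dx_km ** 2


def relative_cost(dx_km: float, reference_dx_km: float) -> float:
    """Rule-of-thumb cost ratio versus a reference grid spacing.

    Refining the spacing by a factor k gives k**2 more columns, and a stable
    explicit time step (CFL condition) must also shrink by about k, so the
    total work grows roughly as k**3. Vertical levels are held fixed.
    """
    k = reference_dx_km / dx_km
    return k ** 3


for dx in (100, 10, 3.25, 1):
    print(f"{dx:>6} km: {grid_columns(dx):>13,.0f} columns, "
          f"~{relative_cost(dx, 100):,.0f}x the work of a 100 km grid")
```

Under these assumptions the surface holds roughly 51 thousand columns at 100 kilometers but about 510 million at 1 kilometer, and going from 10 kilometers to 1 kilometer multiplies the work by about a thousand. Real models add vertical levels, physics packages, and I/O costs that this estimate ignores.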

One example was the Simple Cloud Resolving E3SM Atmosphere Model (or SCREAM), which ran on the Frontier supercomputing system at Oak Ridge National Laboratory and pushed the grid size of a full Earth climate model down to 3.25 kilometers. During a ten-day window in 2023, using 9,000 of the Frontier system’s 9,472 nodes, SCREAM was able to simulate a year per wall-clock hour. Where current Earth system models approximate cloud formation and behavior, models like SCREAM drastically reduce major systematic errors in precipitation prediction. Although adding ocean, ice, or industrial processes to the same model would impose an alarming computational burden, that did not stop others from making alternate plans.

4.4 Earth-2 and Destin-E

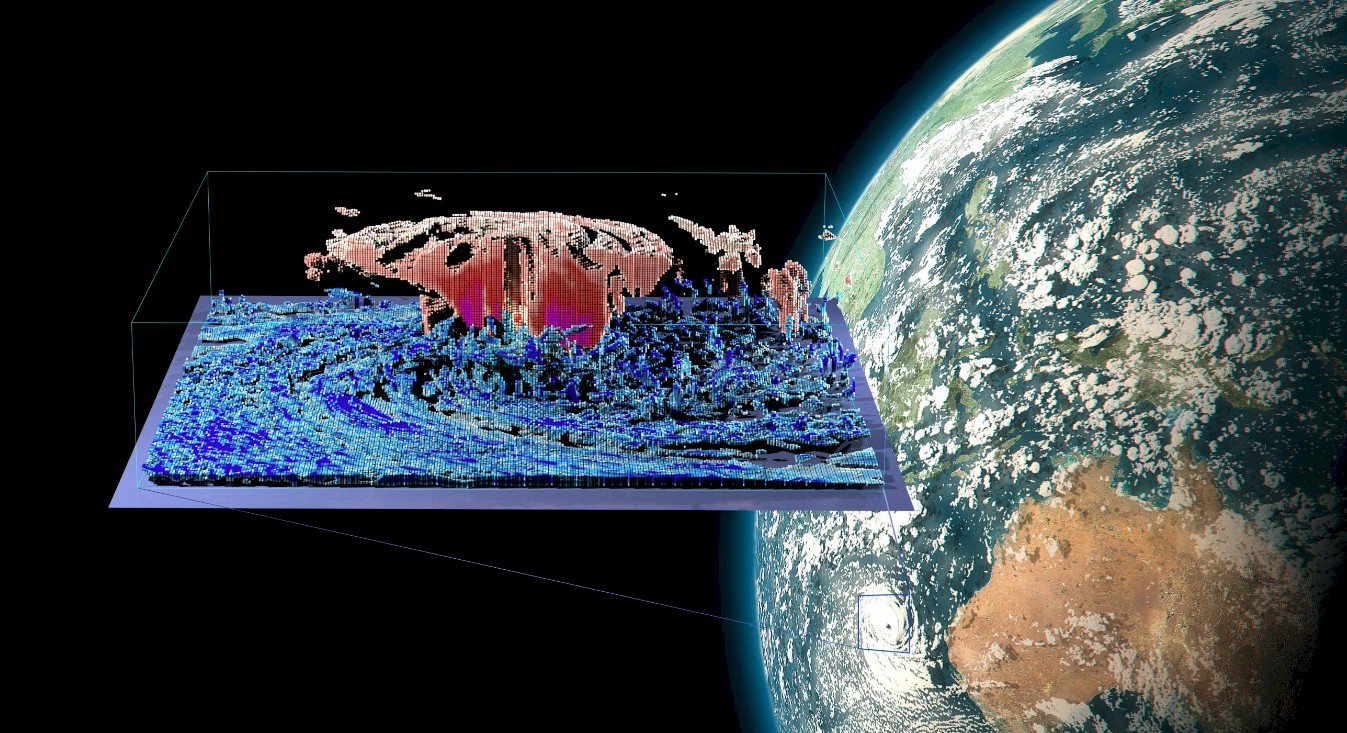

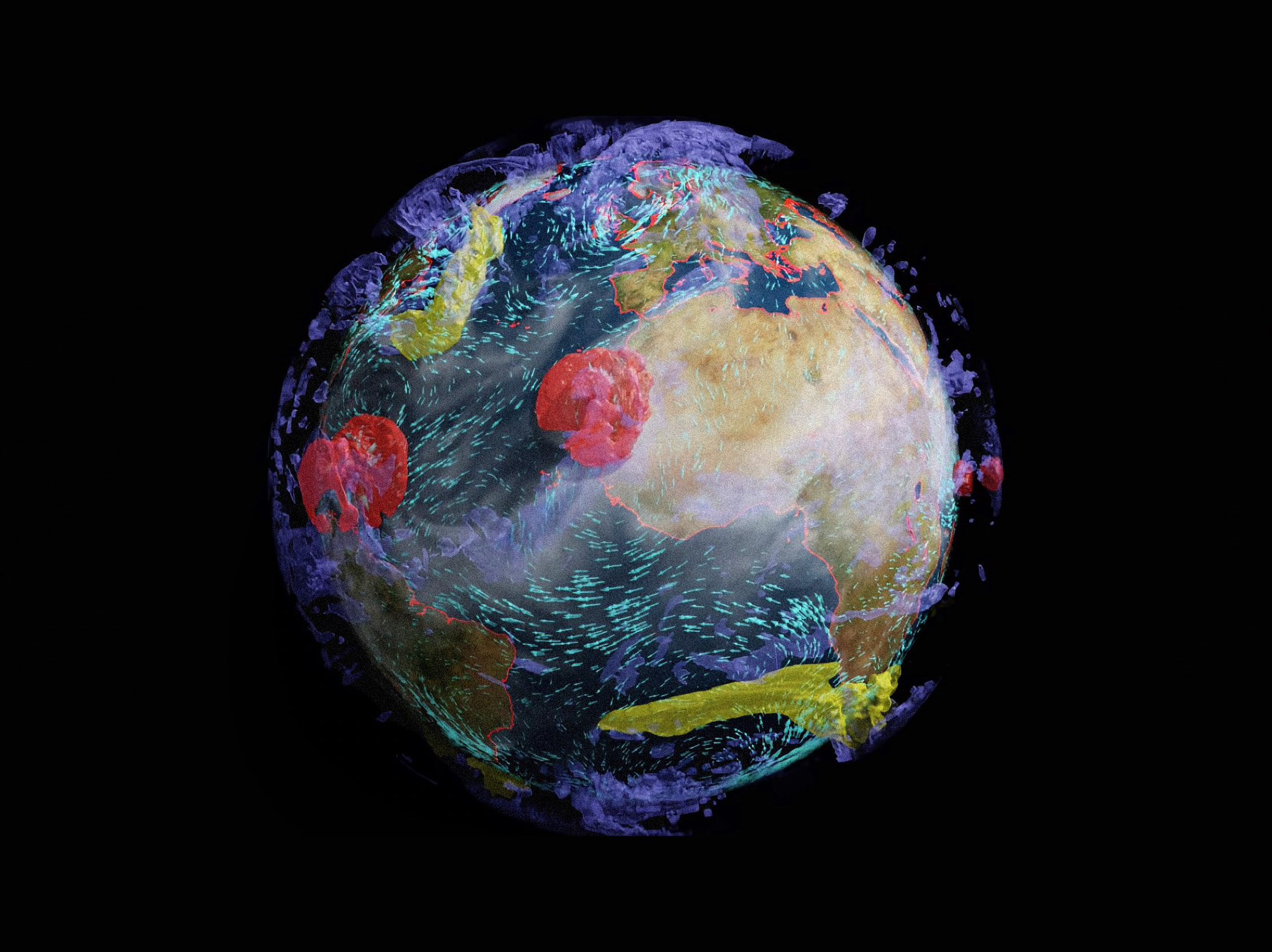

In 2021, NVIDIA co-founder Jensen Huang argued that the next frontier in gaming would be the inclusion of the “laws of particle physics, of gravity, of electromagnetism, of electromagnetic waves, [including] light and radio waves . . . of pressure and sound.”38 While gaming was NVIDIA’s first major gambit, the company’s sights were now set elsewhere. That same year it unveiled a supercomputing project known as Earth-2. During the announcement Huang said, “we must know our future today—see it and feel it—so we can act with urgency. To make our future a reality today, simulation is the answer.”39

Among the demonstrations published in 2023, one accelerated analysis by a factor of 700,000 to help engineers plan and operate carbon capture and storage,40 while another ran FourCastNet (a weather forecasting model that provides accurate short- to medium-range global predictions at 0.25° resolution) with large speedups and accuracy improvements in predicting an extreme weather event.41
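A resolution of 0.25° is an angle, not a distance, so it helps to convert it. The following is a minimal sketch assuming a regular global latitude-longitude grid that includes both poles (the layout in which ERA5 reanalysis data are commonly distributed) and a spherical Earth; it does not claim to reproduce FourCastNet’s exact input shape.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical-Earth assumption


def latlon_grid_shape(res_deg: float) -> tuple[int, int]:
    """Rows (both poles included) and columns of a regular global lat-lon grid."""
    n_lat = round(180 / res_deg) + 1
    n_lon = round(360 / res_deg)
    return n_lat, n_lon


def east_west_spacing_km(res_deg: float, lat_deg: float) -> float:
    """Ground distance spanned by one longitude step at the given latitude."""
    return math.radians(res_deg) * EARTH_RADIUS_KM * math.cos(math.radians(lat_deg))


rows, cols = latlon_grid_shape(0.25)
print(f"0.25 deg grid: {rows} x {cols} = {rows * cols:,} points")
for lat in (0, 45, 60):
    print(f"  spacing at {lat:>2} deg latitude: {east_west_spacing_km(0.25, lat):.1f} km")
```

At the equator a 0.25° step covers roughly 28 kilometers, and the east-west spacing shrinks with the cosine of latitude toward the poles, which is one reason a single “kilometer resolution” figure is only an approximation for such grids.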

Another digital twin project, Destination Earth (or Destin-E), was funded by the European Union as part of a digital overhaul and Green Deal initiative “to monitor and predict environmental change and human impact in support of sustainable development.” Destin-E was to be constituted from “more than big data atlases,” combining new models of the Earth system enabled by high-performance computing “to close substantial and recalcitrant gaps in our ability to look into the future.”42

Outlining the desired final product, Peter Bauer and colleagues explained that “a digital twin of Earth would fully integrate observations with an Earth system model and human subsystems for, for example, water, food and energy resource management, to assess the impacts on, and influences from, these subsystems on Earth system trajectories.” A crucial aspect of the project was bridging the gap between climate models, which track the coupled components of the overall planetary system over decades, and weather models, which run over far smaller spatial scales and shorter timescales: “The twin would allow us to assess possible changes and their causes consistently across local and global spatial scales and over timescales stretching from days to decades.”43

The overview effect, the “cognitive shift” reported by some astronauts after viewing Earth from space, is digitized to become a “single source of truth” in Synth 3D, VBS4, Destin-E, and Earth-2. As a marketing strategy, it teases mastery over the planetary terrain, but it also leads us toward the larger context in which anthropogenic and climate effects are felt. The planet is no mere globe but an evolving multiscalar causal web in which genes, cells, organisms, social groups, buildings, cities, ecosystems, and states interact.

These digital twins harbor echoes of Buckminster Fuller’s World Peace Game from 1961, an educational simulation devised to be war gaming’s nobler-spirited cousin. They present us, in software form, with the conditions Frank et al. set out as indicators of an emerging planetary intelligence.44 Unlike past models, however, digital twins are meant to establish an unbreakable causal connection between simulator and simulated, whether through reinsurance calculations that change the course of real estate development or through trials of crops better suited to a shifting terroir. Rather than a simulation scrambling to process disturbances after the fact, the claim is that we can foresee them and automate the necessary response.

4.5 What If We Could Simulate Everything?

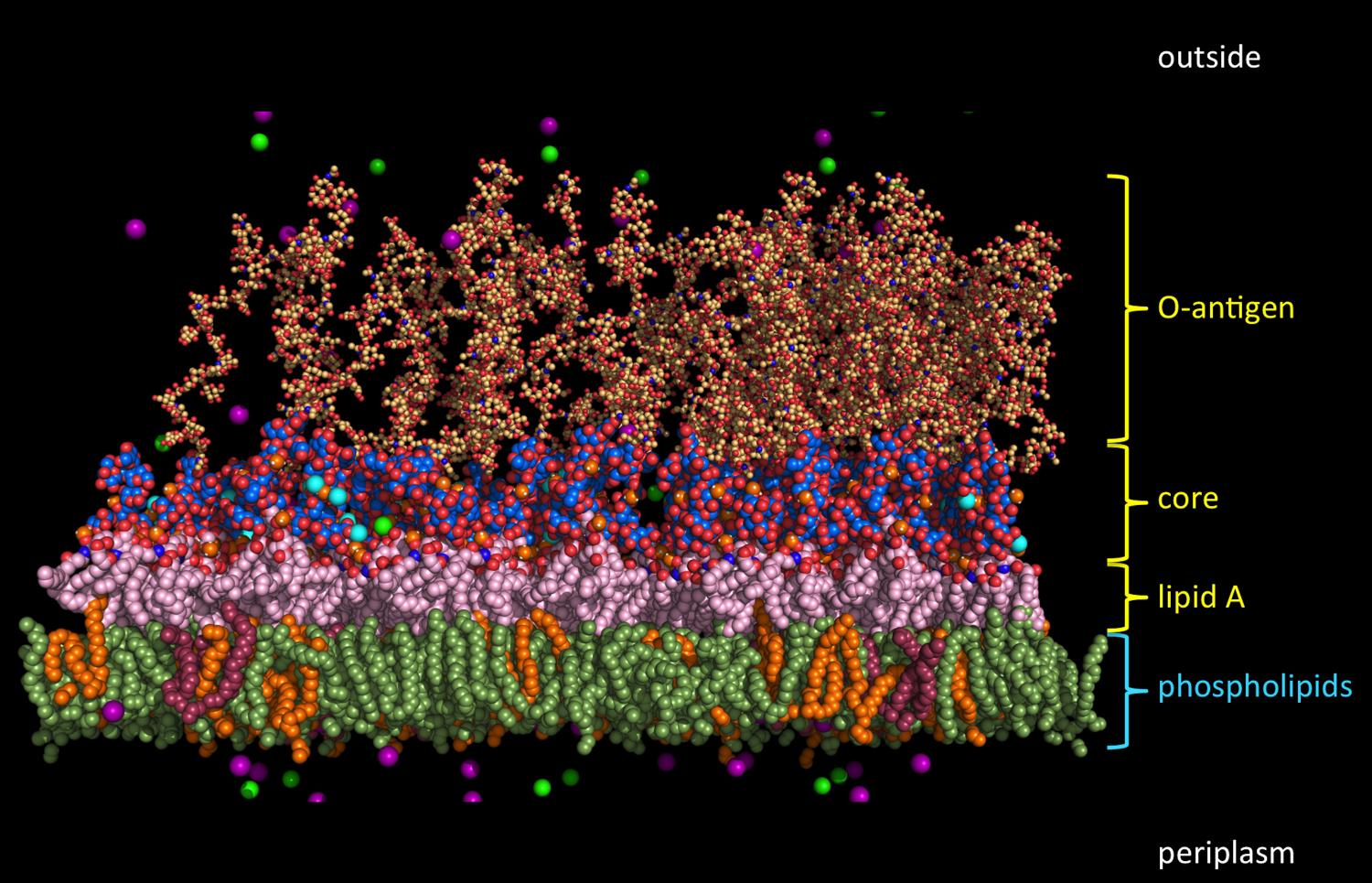

Before the 2010s it was assumed that the smallest, softest, and smartest parts of reality would forever remain unsimulated. Among the sciences it was biology, with its reputation for operating “close to the ground,” that was expected to be the firmest holdout: too complex for the mathematics that powered physics (whose revelations nobody expects to touch) or the synthetic interventions that characterized the discovery process in chemistry. Living matter, by contrast, could only be known through arduous and expensive in vitro experimentation.

In Reconstructing Reality, Morrison observes the mathematization of biology, quite literally, at the bleeding edge. Her examples include anticipating wound closure with partial differential equations, using free boundary problems to predict cancer tumor growth, and modeling lung responses to infection to derive pharmacological treatments. These practices were already under way before the advent of new techniques accelerated or enabled by the computational architecture of GPUs.
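As an illustration of what “anticipating wound closure with partial differential equations” can look like, here is a minimal sketch using the Fisher-KPP reaction-diffusion equation, a standard textbook model in which cells spread by diffusion (migration) and multiply logistically (proliferation). This is an assumed, swapped-in example rather than the specific model Morrison discusses; the one-dimensional geometry and all parameter values are hypothetical and dimensionless.

```python
import numpy as np


def simulate_wound(D=0.1, r=1.0, length=20.0, wound_width=10.0,
                   nx=201, dt=0.01, t_max=100.0, closed_at=0.9):
    """Explicit finite-difference solution of u_t = D u_xx + r u (1 - u) in 1D.

    u is cell density scaled so healthy tissue sits at 1. Returns the time at
    which every point exceeds `closed_at` (None if t_max is reached) and the
    final density profile. All parameters are dimensionless and hypothetical.
    """
    x = np.linspace(0.0, length, nx)
    dx = x[1] - x[0]
    if D * dt / dx ** 2 > 0.5:
        raise ValueError("time step too large for the explicit scheme to be stable")
    # Healthy tissue on both sides of an empty wound centred in the domain.
    u = np.where(np.abs(x - length / 2) < wound_width / 2, 0.0, 1.0)
    t = 0.0
    while t < t_max:
        if u.min() >= closed_at:
            return t, u
        # Edge padding copies the boundary value outward: zero-flux (Neumann) boundaries.
        padded = np.pad(u, 1, mode="edge")
        laplacian = (padded[2:] - 2 * u + padded[:-2]) / dx ** 2
        # Diffusion models cell migration; the logistic term models proliferation.
        u = u + dt * (D * laplacian + r * u * (1 - u))
        t += dt
    return None, u


t_narrow, _ = simulate_wound(wound_width=6.0)
t_wide, _ = simulate_wound(wound_width=10.0)
print(f"closure time: narrow wound {t_narrow:.2f}, wide wound {t_wide:.2f}")
```

The model turns a qualitative expectation (wider wounds take longer to close) into a quantitative prediction that depends on measurable rates of migration (D) and proliferation (r). The explicit scheme was chosen for readability; production solvers would typically use implicit or adaptive time stepping.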

Still thornier debates over the ethics and efficacy of modeling in economics and the social sciences spring from the mistaken assumption that models ought to be lifelike in order to provide meaningful information. Yet even small, finite, or idealized models can yield valuable insights about how populations react to new legal or fiscal arrangements. This is not merely simulation as a shadow of reality but a structure for prediction and intervention, especially with dynamic and unstable groups—from cells to societies.

“We try to simulate tissues and organs, how they grow, how their development works,” says James Sharpe of the European Molecular Biology Laboratory in Barcelona, explaining how the availability of affordable GPUs transformed the organization’s practices.45 Dividing the cell populations in a given tissue into smaller groups and sending those computations to GPUs means that tasks like microscopy image analysis can be completed in a fraction of the time they once took.
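The divide-and-dispatch pattern can be sketched on a CPU. The example below assumes a synthetic random image in place of a microscopy frame and a thread pool standing in for GPU thread blocks; the per-tile task (counting pixels above a brightness threshold) is a hypothetical simplification. It works because each tile can be processed independently and the partial results simply add up.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def split_into_tiles(image: np.ndarray, tile: int):
    """Yield non-overlapping tiles; tiles on the right and bottom edges may be smaller."""
    height, width = image.shape
    for i in range(0, height, tile):
        for j in range(0, width, tile):
            yield image[i:i + tile, j:j + tile]


def count_foreground(tile: np.ndarray, threshold: float) -> int:
    """Per-tile work: how many pixels are brighter than the threshold."""
    return int((tile > threshold).sum())


def parallel_count(image: np.ndarray, tile: int = 64, threshold: float = 0.5,
                   workers: int = 4) -> int:
    """Farm tiles out to a worker pool (a CPU stand-in for GPU blocks) and combine."""
    tiles = list(split_into_tiles(image, tile))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        counts = pool.map(lambda t: count_foreground(t, threshold), tiles)
        return sum(counts)


rng = np.random.default_rng(0)
image = rng.random((300, 200))  # synthetic stand-in for a microscopy frame
print(parallel_count(image), count_foreground(image, 0.5))
```

Pixel counting splits cleanly; tasks such as segmenting a cell that straddles two tiles do not, which is why real pipelines usually process overlapping tiles and reconcile the borders afterward.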

The boundaries of what can and cannot be simulated with existing technology and expertise are always changing. Finalists for the 2023 Gordon Bell Prize, awarded by the Association for Computing Machinery, included a simulation of a jet engine based on a decade-old NASA challenge; a simulation of quantum particles based on density functional theory and the quantum many-body problem; and a simulation of a nuclear reactor complete with radiation transport plus heat and fluid dynamics inside the core. Beyond helping us better understand existing phenomena, simulations are integral to the technologies we aspire to build. They take center stage in Pasteur’s quadrant, where fundamental discoveries are sought in areas of immediate use.

In early 2022, researchers from Google DeepMind used a trained neural network to stabilize the plasma in an experimental tokamak fusion reactor in Lausanne, Switzerland. The AI was split into two parts: a network that ran on a simulation of the reactor and learned to control it, and a smaller, faster-running network that controlled the reactor itself. The AI processed ninety different measurements 10,000 times per second, adjusting the voltage in nineteen large magnets to hold the plasma in place. Although the system ran for just two seconds, the longest the experimental device can currently sustain, the AI adjusted the magnets in ways that humans had not tried before.
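The figures in this paragraph define a tight real-time loop, and a short sketch makes the arithmetic explicit: 10,000 decisions per second over two seconds is 20,000 decisions, each with a budget of 100 microseconds. The controller here is a hypothetical stand-in (a single random linear layer and a random sensor feed), not the trained network used in the experiment; only the input size, output size, rate, and duration come from the text.

```python
import numpy as np

N_MEASUREMENTS = 90   # sensor inputs per control step (figure from the text)
N_COILS = 19          # magnet voltages commanded per step (figure from the text)
CONTROL_HZ = 10_000   # control steps per second (figure from the text)
DURATION_S = 2.0      # length of the run (figure from the text)

rng = np.random.default_rng(0)
# Hypothetical stand-in for the trained network: a single random linear layer.
weights = rng.normal(scale=0.01, size=(N_COILS, N_MEASUREMENTS))


def policy(measurements: np.ndarray) -> np.ndarray:
    """Map one observation vector to normalized coil voltage commands in [-1, 1]."""
    return np.clip(weights @ measurements, -1.0, 1.0)


def read_sensors() -> np.ndarray:
    """Hypothetical sensor feed; a real controller would read the device's diagnostics."""
    return rng.normal(size=N_MEASUREMENTS)


n_steps = int(CONTROL_HZ * DURATION_S)  # total decisions in one run
budget_us = 1e6 / CONTROL_HZ            # time available per decision, in microseconds

for _ in range(n_steps):
    action = policy(read_sensors())  # a real system would send this to the magnet power supplies

print(f"{n_steps} decisions, {budget_us:.0f} microseconds each, action shape {action.shape}")
```

The tight per-step budget suggests why the deployed network had to be small and fast, while the larger network could do its work offline against the simulation.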

Evan Schneider of the University of Pittsburgh has used the Frontier system to run simulations of how our galaxy has evolved over time: how gases flow in and out of the Milky Way, coalescing into stars and ultimately dissipating as those stars explode. The resolution of these galactic simulations, which captured not only large-scale properties of the galaxy, some 100,000 light-years across, but also properties of supernovas about ten light-years across, was high enough to zoom in on individual exploding stars. “It would be analogous to creating a physically accurate model of a can of beer along with the individual yeast cells within it, and the interactions at each scale in between,” Schneider told journalists.46

The ability to model multi-scale phenomena and their cause–effect mechanisms had once been a major hurdle. But a new generation of researchers pioneering “simulation intelligence” brought about a new paradigm of guided technological development, the fundamentals of which would be carried forward to the next generation of hardware design and ultimately to the Infinity Mirror.47

The researchers at Pasteur-ISI, for example, led by rocket-scientist-turned-AI-engineer Alexander Lavin, developed simulation test beds as in silico playgrounds in which human experts and AI agents can design and experiment. The team also has its own digital twin Earth project in collaboration with NASA’s Frontier Development Lab: “Existing scientific methods are deductive and objective-based. The next generation of science, brought about by innovations in simulation, AI/ML, and accelerated computing, can be inductive and open-ended; human-machine teams will efficiently explore an exponentially larger space of hypotheses and solutions, in all domains at all scales.”48