-

The appearance of a frightening imitation is a common trope in cinema. Christopher Nolan’s The Prestige (2006), Denis Villeneuve’s Enemy (2013), and Richard Ayoade’s The Double (2013) weave contemporary concerns about identity, the unconscious, and the limits of perception into classic storylines inspired by Nikolai Gogol, Edgar Allan Poe, Ernst Theodor Amadeus Hoffmann, and others. However, the on-screen inheritor of Platonic–Cartesian skepticism may be the firmly established genre of simulation cinema—most obviously Rainer Werner Fassbinder’s Welt am Draht (1973), which inspired The Matrix (1999), without which it would be hard to imagine Westworld (2016–2022) or Ready Player One (2018). Each is attuned to the possibilities of inhabitable computer simulations and the philosophy of universal computationalism, as popularized in Nick Bostrom’s 2003 paper, “Are You Living in a Computer Simulation?” (243–55). The paper assures us “it is not an essential property of consciousness that it is implemented on carbon-based biological neural networks inside a cranium: silicon-based processors inside a computer could in principle do the trick as well.”

-

Bogost, Unit Operations.

-

Vallverdú J (2014) What are simulations? An epistemological approach. Procedia Technology 13, 6-15.

-

In the 1760s, French naturalist and encyclopedia-maker Georges-Louis Leclerc, Comte de Buffon, started a series of experiments heating iron balls until they glowed white, then measuring how long they took to cool to room temperature. He didn’t use a thermometer for these experiments, relying instead on touch, yet his results were surprisingly accurate. Next, he heated and cooled different materials including sandstone, glass, and various rocks. He did so hoping to learn the age of Earth. Buffon believed that Earth indeed had a beginning, and that it was originally a molten mass too hot for any living creature. It had to cool before it could support life. In 1778, he published Les Époques de la nature, asserting that Earth was 74,832 years old, a 68,000-year increase on the Biblical chronology that had held since the Middle Ages.

-

In Simulation and Similarity (2013), 6, philosopher Michael Weisberg explains: “Roughly speaking, concrete models are physical objects whose physical properties can potentially stand in representational relationships with real-world phenomena. Mathematical models are abstract structures whose properties can potentially stand in relation to mathematical representations of phenomena. Computational models are sets of procedures that can potentially stand in relation to a computational description of the behavior of a system.”

-

The reason for using the planet as the relevant scale of analysis in an astrobiology paper is twofold. First, it enables the abstraction of historical dynamics on Earth that may prove to be generic, a useful benchmark for understanding evolutionary principles elsewhere in the universe. Second, it means recognizing that small tweaks in the DNA of microscopic organisms can cause changes that cascade upward and become significant enough in scale to change the weather. New states are created by upward causation, which in turn constrain and shape behaviors at lower levels. As such, the idea of a planetary boundary is neither a single ultimate region of activity nor a hierarchy of scales in any conventional sense. Instead we have a prismatic spheroid where both less and more are different. For more, see the original paper by Frank et al., “Intelligence,” 47–61.

-

Frank et al., “Anthropocene Generalized,” 503–18. The five classes of planet are an update of astrophysicist Nikolai Kardashev’s energy-harvesting measuring stick, the Kardashev scale, a rubric for classifying intelligent civilizations according to their ability to tap into the supplies of their planet, star, or galaxy of origin. Later adaptations of the Kardashev scale have ranked other features such as capacity to compute, to bounce back from natural disasters, or to engineer at ever smaller scales, from objects to genes and molecules, atoms and nuclei, quarks and leptons, and ultimately space and time.

-

The paper borrows the concept of “evolutionary transitions” from Eörs Szathmáry and John Maynard Smith’s The Major Transitions in Evolution (1995), which proposes a series of “changes in the way information is stored and transmitted” to account for an apparent increase in biological complexity in evolutionary lineages.

-

Haff, “Humans and Technology,” 126–36.

-

“In living systems, information always carries a semantic aspect—its meaning—even if it is something as simple as the direction of a nutrient gradient in chemo-taxis.” Frank et al., “Intelligence,” 6.

-

Clark, Experience Machine. Simulation technologies that artificialize this dynamic conform to what Richard Dawkins described as an “extended phenotype,” extending the output of genes beyond protein synthesis or eye color to the changes they make to their environment.

-

The anecdotal parallel to this line of neuroscientific inquiry would be the “method of loci” or “memory palace,” which involves placing objects, words, or symbols into a familiar environment, such as one’s home, then mentally moving through that environment to recollect the new information. In his book Moonwalking with Einstein (2011), journalist Joshua Foer recounts his victory at the 2006 USA Memory Championship, at which he memorized fifty-two cards in one minute and forty seconds despite having no real aptitude for remembering (the book opens with him forgetting his keys a year earlier). This suggests that learning new material is made easier by attaching information to an existing reference frame, but also that thinking itself evolves out of sensory–motor interactions between body and world.

-

Hawkins, A Thousand Brains, 101.

-

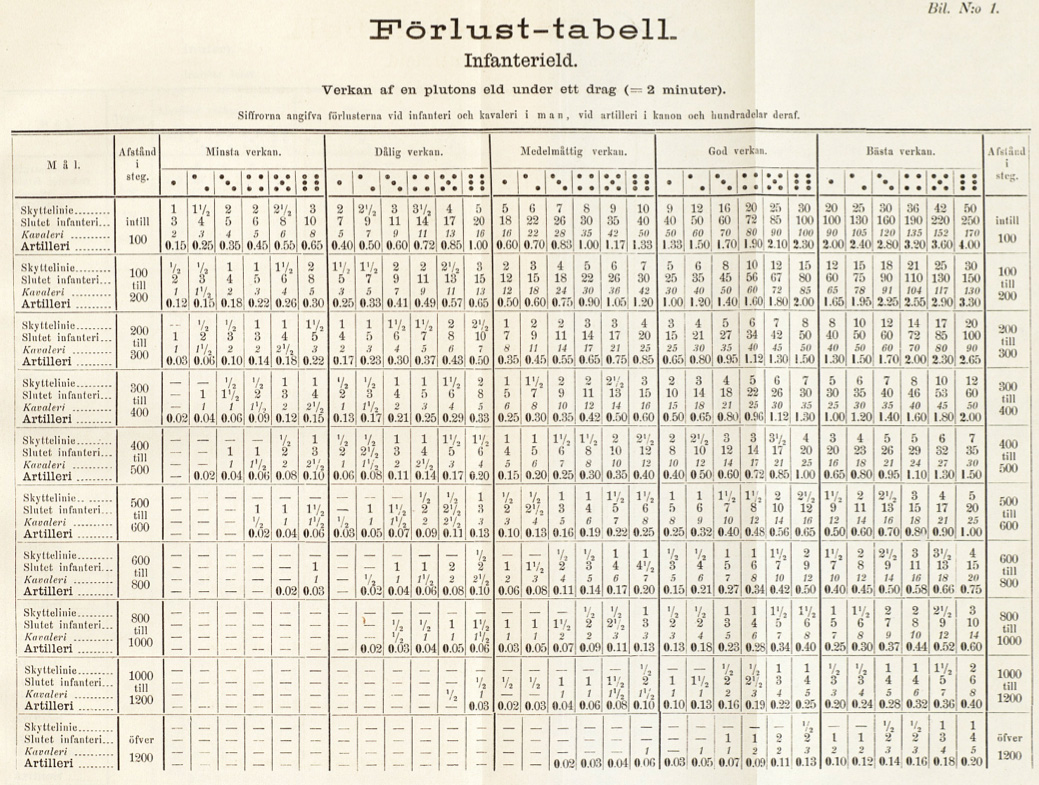

IMBUILD001—TABLES AND MATRICES: Each “IMBUILD” represents an essential precursor to the Infinity Mirror, beginning here in the early days of simulation technology. In the case of Kriegsspiel, a set table is used to calculate combat outcomes. The game’s map features different types of terrain—forests, mountains, rivers—each of which influences the effectiveness of a battalion. A unit’s artillery, strengths, and relative position are disturbed by rolling dice, introducing a stochastic element to mimic the uncertainty of war. The game’s umpire maintains a delay between orders being given and their implementation, recreating the communication issues of nineteenth-century warfare. In a sense, the umpire represents the “human computer” of the age: employed to carry out numerical procedures without deviating; producing and then working with mathematical tables for the insurance industry, agriculture, science, industry, and government. In the Netherlands, a (human-run) computer simulation was used to predict the effects of building the Afsluitdijk between 1927 and 1932. The majority of calculations related to the Manhattan Project were carried out by uncredited “computers.”

-

IMBUILD002—MATHEMATICAL FORMULAS: Calculations that approximate in numbers, from first principles, the behavior of objects, matter, and agents, are the foundation of the study of physics. They are not representations of any deeper reality, however, but extensions of intelligence’s capacity to know its environment, no less real than any other type of thought.

-

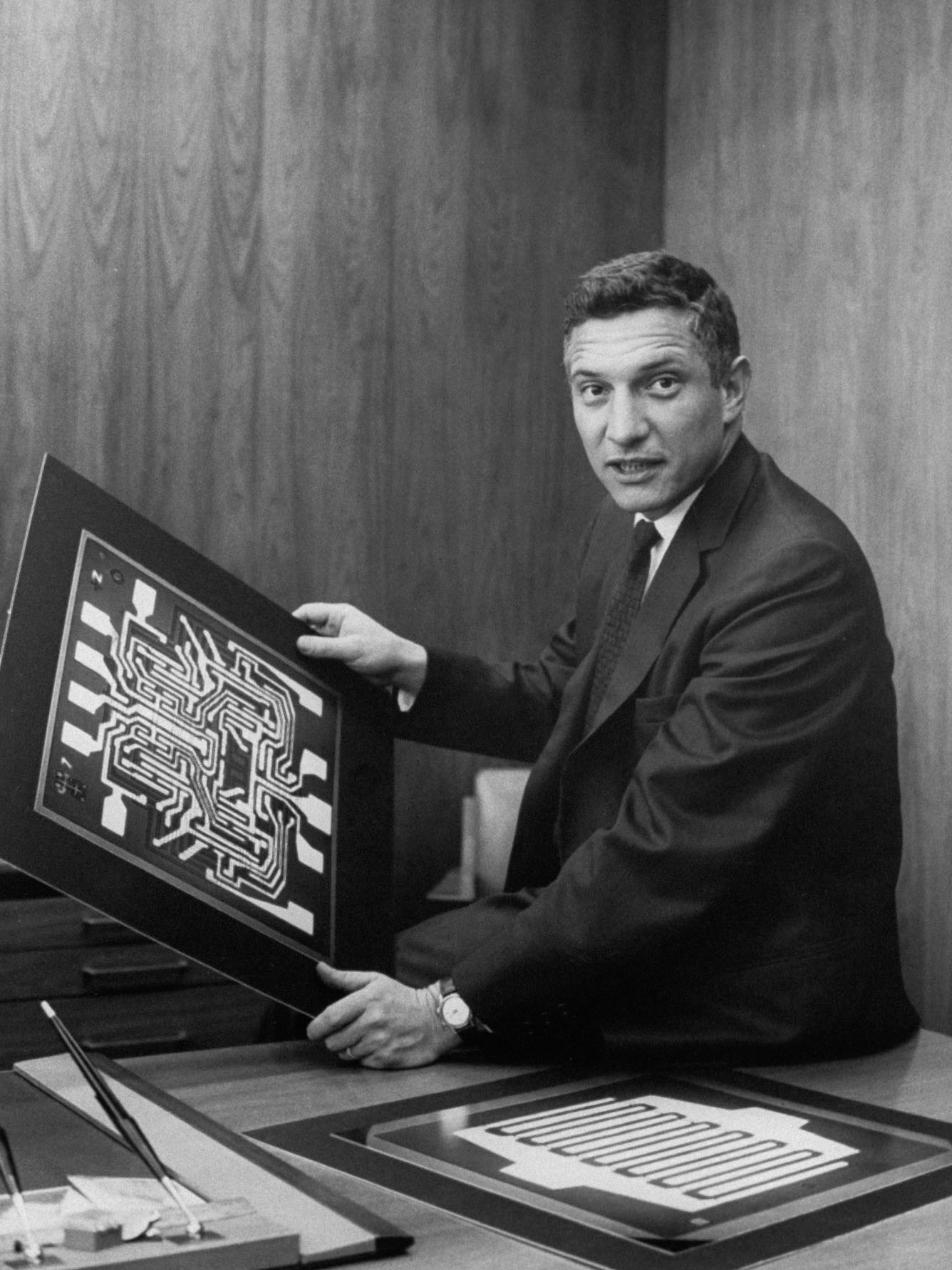

IMBUILD003—TRANSISTORS: The Electronic Numerical Integrator and Computer (ENIAC), the first Turing-complete, programmable electronic computer, was finished in 1945. The 27-ton machine was intended to calculate ballistic trajectories at far higher resolution than was possible using tables and human computers, though its actual first program simulated the potential for a fusion-powered, thermonuclear bomb. The ENIAC’s vacuum tubes and crystal diodes were soon outpaced by the advent of the point-contact transistor, the metal-oxide-semiconductor field-effect transistor (silicon oxidation), and the mesa transistor, which involved covering a block of germanium—another semiconductor material—with a drop of wax. The engineer Jay Lathrop, who worked at the US Army’s Diamond Ordnance Fuze Laboratories in the 1950s, needed a transistor that would fit inside a mortar shell. The wax method was difficult to miniaturize, so Lathrop and lab partner James Nall devised a method that involved turning microscope optics upside down. As Chris Miller, author of Chip War (2023), writes in “Chip Patterning Machines”:

-

PEO STRI, “History.”

-

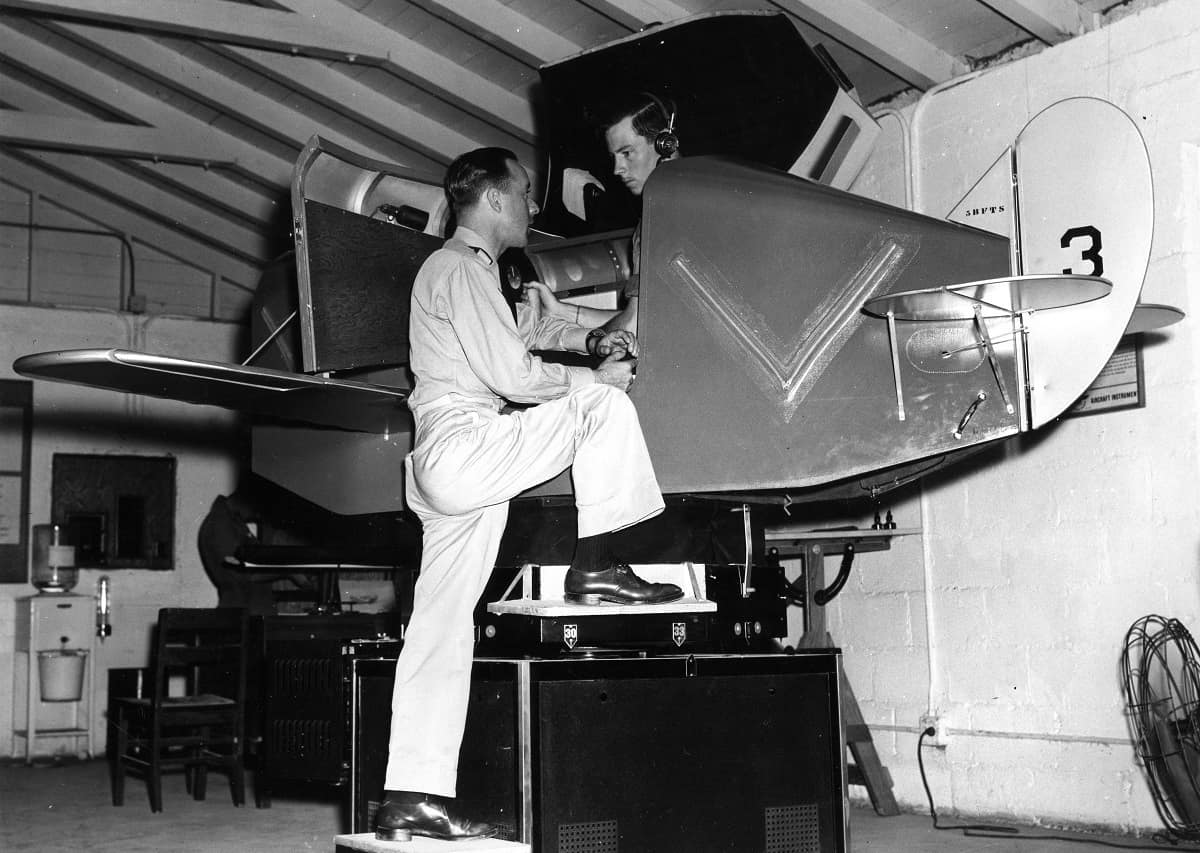

IMBUILD004—HYBRID SYSTEMS: The first electronic flight simulator was built by Edwin Link in 1929, a chunky blue aircraft frame with an engine underneath that used pneumatic bellows to control pitch and roll and a small motor-driven device to produce turbulence. Link built the “Link Trainer” because his father, a piano maker, could not afford flight school for his son. It was the high number of casualties due to pilot error at the time, however, that led the US Army Air Corps to buy six of the devices for $3,500 each. Many of the pilots heading into World War II were trained on them. The first computer image generation systems for simulation were produced by General Electric for the space program. Early versions produced a patterned “ground plane” image while later systems were able to generate images of 3D objects, enabling continuous viewing of 180 degrees. The interplay of calculated scenery in a loop with the onboard instruments and the human between them represents a specific type of hybrid simulation that incorporates physical or “concrete” testing features with computer modeling, and in this case a human-in-the-loop. For more, see the presentation “Brief History of Flight Simulation” by Ray L. Page, a former manager at Qantas.

-

Edery et al., “Changing the Game,” 141.

-

Flatlife, “Evolution of Unreal Engine [1995-2023].”

-

America’s Army inspired tactics that soon became widespread, from crowd-sourcing stock market picks to recruitment for air traffic controllers (Federal Aviation Administration, “Level Up Your Career”). The game incorporated real-world data based on the latest armaments, vehicles, medical training (though this module was optional), and even set up disciplinary protocols for poor behavior. It cost $7 million from a recruitment budget of $3 billion and was continually developed with an additional $3 million every year until the series was discontinued in 2022. According to Colonel Wardynski, the game generated interest from other agencies, which resulted in the development of a training version for internal government use only. In a 2005 blog post, Scott Miller, the game developer and publisher who created Duke Nukem in 1991 and worked on America’s Army in the early 2000s, wrote about furious disagreements and a run of resignations after the moonshot project turned out to be a runaway success and its history was gradually rewritten: “When the project was just a fly-by-night rogue mission, no one paid much attention to it. Once the Army figured out that the game was the single most successful marketing campaign they’d ever launched (at 1/3rd of 1% of their annual advertising budget), we suddenly came under a very big microscope ... The Army is basically clueless when it comes to making games and they don’t know how to treat people, especially game developers. They had an A-level team, but I honestly don’t see them building another one (particularly since they weren’t the ones who built the first one). It’ll be interesting to watch what happens though. Essentially, there was a magic couple of years there where two totally alien cultures came together to do something cool.”

-

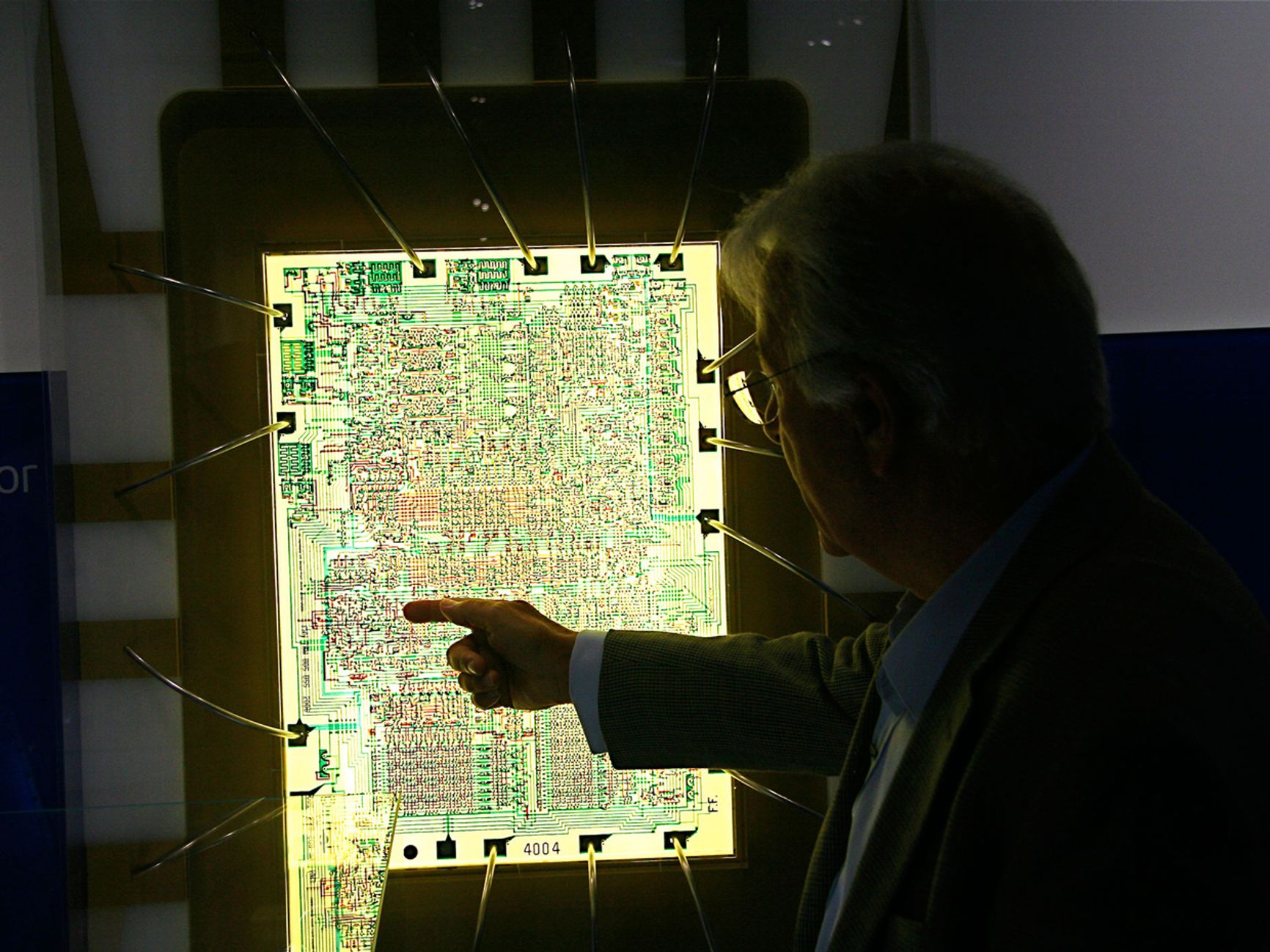

IMBUILD005—INTEL 4004: The first microprocessor, the 4-bit Intel 4004 CPU, was released to the market in 1971. It had been an under-resourced side project at Intel, whose primary concern at the time was producing memory chips. The company lacked specialists capable of patterning the logic gates to be imprinted on silicon. In the end they hired Italian-American engineer Federico Faggin, who left his initials, “F. F.,” on the design, an artist’s signature transferred into mass production.

-

IMBUILD006—PICTURE PROCESSING UNIT: The Japanese electronics giant Ricoh developed what it described as a “video co-processor” in the early 1980s and named it the picture processing unit (PPU), later incorporated in Nintendo’s Famicom and the 1985 Nintendo Entertainment System. Up until that point the entire command center had been the CPU—essentially the brain of the machine, the intellectual property-controlled and most expensive part of the product. The major realization for Nintendo was that their system didn’t need to be a universal machine that could run any kind of software. Nobody was planning to run payroll or inventory management on it. The device was to be used for gaming and gaming alone, meaning they could pair a cheaper CPU with a dedicated parallel chip for processing the background scenery and sprites (moving characters), which it did with the 8-bit, 256×240 pixel PPU.

-

IMBUILD007—GEFORCE 8800GTX: The 128 processing streams (or cores) inside NVIDIA’s GeForce 8800GTX allowed graphical tasks to be parallelized at greater efficiency than had been possible before. This enabled greater and more immersive gameplay but also opened the door to general-purpose GPU computing using graphics cards. A new paradigm in simulation capacity had emerged to support the insatiable hunger for better graphics and in-game physics, and was gradually appreciated and absorbed into other fields, mostly during the 2010s. Gaming produced the core technology behind complex modeling, advanced plasma physics, and machine learning, but also Bitcoin mining. In the early 2020s, the bull market and subsequent crash of cryptocurrencies, the expansion of remote work, factory shutdowns across Asia, and PC gamers trapped indoors due to the COVID-19 pandemic produced a worldwide shortage of GPUs, in what we might think of as a “simulation crisis.” It brought a widespread understanding that an expanding virtual realm is not without cost—the energy and resources required to keep these worlds operational, but also the immense up-front investment to develop and manufacture the hardware on which they run.

-

Ray tracing is a means of calculating light transport plotted across a grid system whose history dates back to the German draftsman Albrecht Dürer. The necessary computations can be expensive, which is why NVIDIA’s RTX (the RT is for “ray tracing”) GPU features dedicated ray tracing cores. Unreal Engine 5, announced in 2020, incorporates Lumen, a dynamic global illumination and reflections system designed for next-generation consoles based on ray tracing to produce photorealistic effects. Deep-learning super sampling, meanwhile, uses dedicated deep-learning hardware to upscale frames that are rendered at only a fraction of the resolution perceived by the viewer.

-

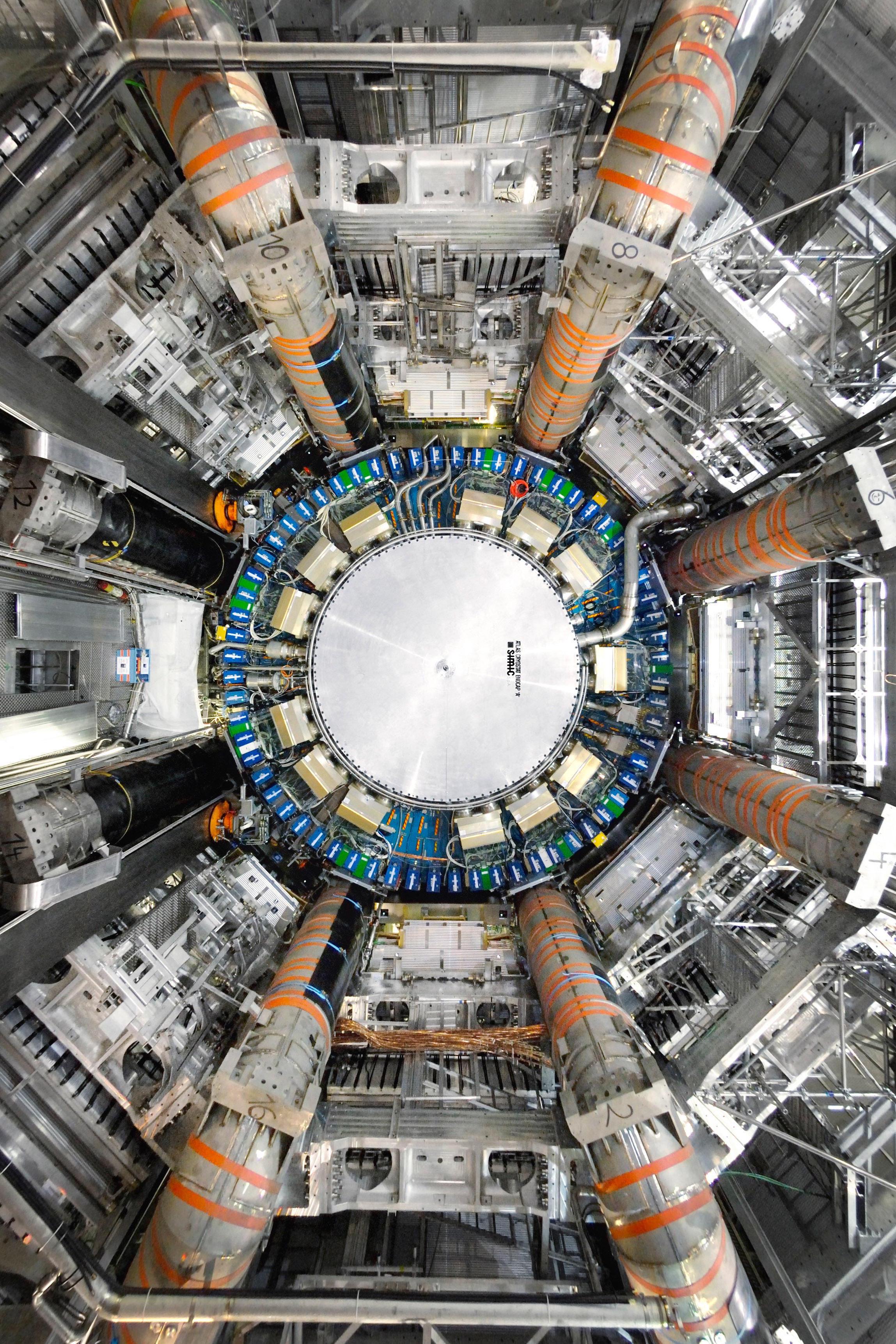

In Reconstructing Reality, Morrison uses the example of the Large Hadron Collider and the discovery of the long-theorized Higgs particle in Peter Higgs’s lifetime to explain how simulations contribute to experimental knowledge. She notes that the detected five-sigma signal (a result with a 0.00003 percent likelihood of being a statistical fluctuation) could only have been made through the use of simulation data—never mind the vast number of simulations that would have been required to produce the machine itself.
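The five-sigma figure quoted above can be checked with a few lines of arithmetic. What follows is a minimal sketch, assuming the particle-physics convention of a one-sided tail of the standard normal distribution; the function name `one_sided_p_value` is illustrative and does not come from Morrison's text.

```python
import math


def one_sided_p_value(n_sigma: float) -> float:
    """Probability that a standard normal variable exceeds n_sigma.

    Assumption: the one-sided (upper-tail) convention is used for
    quoting discovery significance.
    """
    return 0.5 * math.erfc(n_sigma / math.sqrt(2))


# Chance that a five-sigma excess is a mere statistical fluctuation.
p = one_sided_p_value(5.0)
print(f"p = {p:.3e} ({p * 100:.5f} percent)")
```

Under that assumption the tail probability is roughly 2.9 in ten million, which rounds to the 0.00003 percent cited in the note.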

-

PEO STRI, “History.”

-

PEO STRI, “One World Terrain.”

-

Bohemia Interactive, “VBS4.”

-

In the same interview, Morrison described some of the work partner militaries had been doing to simulate future battle spaces, including a “snake robot” for the Australian Defence Force, which began conducting trials using the VBS series in 2003: “It was a robot snake that laid explosive devices, and each device was a part of the snake” (for the full interview, see Stuart, “How Simulation Games Prepare,” 2019).

-

BAE Systems, “BAE Systems Completes Acquisition.”

-

The 2023 Hummer EV used Epic Games’s Unreal Engine to visualize a digital twin of itself on its 13.4-inch dashboard display. The interface was designed by Perception, the creative studio responsible for inventing the technology in Marvel movies, and bears no small resemblance to the inside of Iron Man’s helmet. As well as running real-time diagnostics on the vehicle, users are able to customize the skins of the vehicle on-screen and to reimagine the terrain as something quite different than the suburban roads they may be driving on—such as Mars, for example.

-

Pollok et al., “UnrealGT.”

-

Hopfstock et al., “Building a Digital Twin.”

-

Simulations are places where multiple competing interests overlap—whether that be challenger theories in science, wants and needs in social models, or the competitive and collaborative aspects of game worlds. Serious games are instances in which the interests of players are pitted against one another to arrive at useful outcomes, such as when volunteers were asked to play a farming simulator to develop protocols against African Swine Fever (UVM, “Video Games”), or to run operations in a shipping port as a way to develop greener practices (Deltares, “Port of the Future”). Sometimes the dynamics are unplanned. They may amount to case studies of governance, as in Kio-tv’s “EvE Online,” which can host 20,000 participants across 8,000 solar systems engaged in war and commerce. Loot systems like Dragon Kill Points may stand in for emergent political economy to solve redistribution problems in massively multiplayer online games like EverQuest or World of Warcraft (see Citarella, “DKP”).

-

IMBUILD008—NVIDIA A100 AND SUNWAY SW26010: The months shortly after the public release of GPT-3.5 were characterized, both approvingly and with great reservation, as an “AI arms race.” LLMs and generative AI were being evaluated not only for possible application in warfare but also, more broadly, in all fields where intelligence was at work—from script writing to cooking. OpenAI’s GPT models were built on Microsoft servers running cabinets filled with NVIDIA A100 Tensor Core GPUs, sold on boards featuring eight individual units at around $200,000 each.

-

Timothy Prickett Morgan, “Climate Simulation.”

-

Dean Takahashi, “Nvidia CEO.”

-

Jensen Huang, “NVIDIA to Build Earth-2.”

-

NVIDIA, “Accelerating Carbon Capture.”

-

NVIDIA, “Predicting Extreme Weather.”

-

Bauer et al., “Digital Twin,” 80–83.

-

Bauer et al., “Digital Twin,” 81. IMBUILD009—EXTREME SILICON COMPUTING: In “The Digital Revolution of Earth-System Science,” Bauer et al. note serious concern within the community about the delivery of reliable weather and climate predictions “in the post-Moore/Dennard era,” and propose a novel infrastructure “that is scalable and more adaptable to future, yet unknown computing architectures.”

-

Frank et al., “Intelligence,” 47–61. They are not only a clear example of “an emergent complex adaptive system composed of multi-layered networks of semantic information flows,” 55, but also of the way that self-representation is a fundamental first step toward prolonged “going-on,” 53. Much as groups of neurons within cortical columns create thousands of competing models (brains being one demonstration of intelligence among many), Earth is creating ever-more sophisticated models of itself which, like all models, are tools for action and greater understanding.

-

Berta Carreño and Laura Howes, “The Rise of GPU Computing.”

-

Sophia Chen, “The World’s Fastest Supercomputers.”

-

The “simulation intelligence” stack is outlined in a paper with a conspicuously high-profile line-up, co-authored by figures from across industry (Intel, Qualcomm AI) and research (Oxford University, Alan Turing Institute, and the Santa Fe Institute). It includes multi-physics and multi-scale modeling, surrogate modeling and emulation, simulation-based inference, causal modeling and inference, agent-based modeling, probabilistic programming, differentiable programming, open-ended optimization, and machine programming. See Lavin et al., “Simulation Intelligence,” arXiv.

-

Pasteur-ISI, “Technology: What If Simulators ...”

-

Fuechsle et al., “Single-Atom Transistor,” 242–6.

-

This was achieved by working on a combination of three factors: the wavelength of the light; the k1 coefficient, which stands in for a range of process-related factors; and the numerical aperture, a measure of the range of angles over which the system can emit light. The critical dimension—that is, the smallest possible feature size you can print with a certain photolithography-exposure tool—is proportional to the wavelength of light divided by the numerical aperture of the optics. To achieve smaller critical dimensions, engineers must use shorter light wavelengths, or larger numerical apertures, or a combination of the two.
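The proportionality described in this note is usually written as the Rayleigh criterion. The worked line below is a sketch for illustration only: the 13.5 nm wavelength is that of EUV light, while the values k_1 = 0.3 and NA = 0.33 are assumptions chosen to make the arithmetic concrete, not figures taken from the source.

```latex
% Critical dimension as a function of wavelength, process factor, and aperture
\[
  \mathrm{CD} = k_1 \,\frac{\lambda}{\mathrm{NA}}
\]
% Illustrative values (assumed): k_1 = 0.3, \lambda = 13.5 nm, NA = 0.33
\[
  \mathrm{CD} \approx 0.3 \times \frac{13.5\,\mathrm{nm}}{0.33} \approx 12.3\,\mathrm{nm}
\]
```

With the process factor held fixed, halving the wavelength or doubling the numerical aperture would each halve the smallest printable feature, which is why engineers pursue both routes.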

-

As Jan van Schoot explained, EUV involves “hitting molten tin droplets in mid flight with a powerful CO2 laser. The laser vaporizes the tin into a plasma, emitting a spectrum of photonic energy. From this spectrum, the EUV optics harvest the required 13.5-nm wavelength and direct it through a series of mirrors before it is reflected off a patterned mask to project that pattern onto the wafer. And all of this must be done in an ultraclean vacuum, because the 13.5-nm wavelength is absorbed by air.” “This Machine,” 2023.

-

Frank et al., “Intelligence,” 56, 53.