EPILOGUE

In 2034, a number of Earth’s most advanced simulations began to show a similar anomaly. As the world’s supercomputers ran scenarios of future coastline erosion, galactic mining schemes, and rapid urban redevelopment, an uncategorizable event increased noise in the system, producing a growing number of errors that cast the simulations’ usefulness in doubt.

In time, researchers determined that the error was not in fact a black swan event, neither a solar flare nor an unsurpassable technical threshold, but something they had been expecting since the 2020s: the moment when the representational complexity of the system doing the modeling surpassed all the available data it was expected to model. Like Y2K or artificial general intelligence before it, this simulation point omega had been a focal point for speculation for decades. Would overfitting reality lead to epistemic black holes, a total disintegration of any ground truth on which to act?

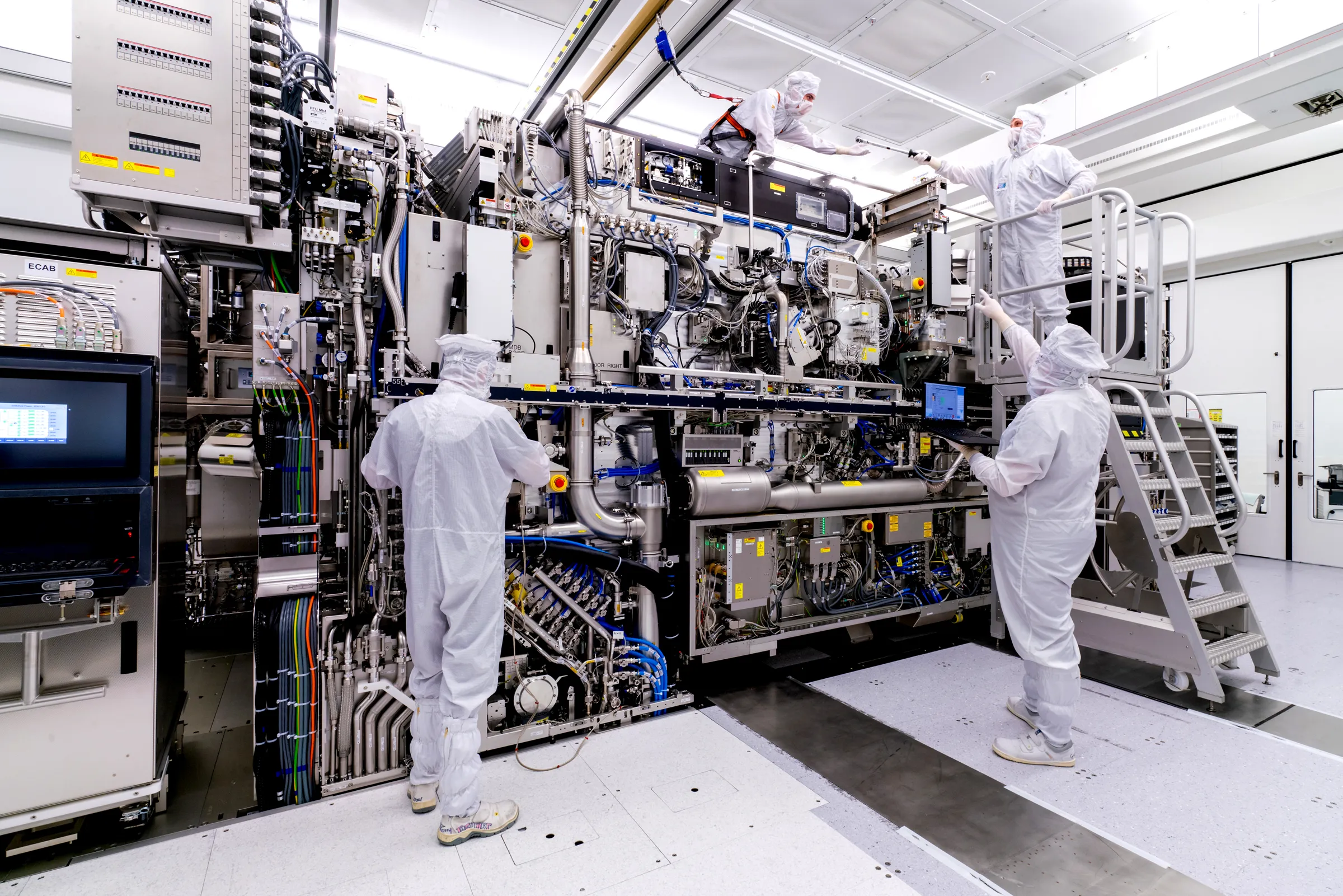

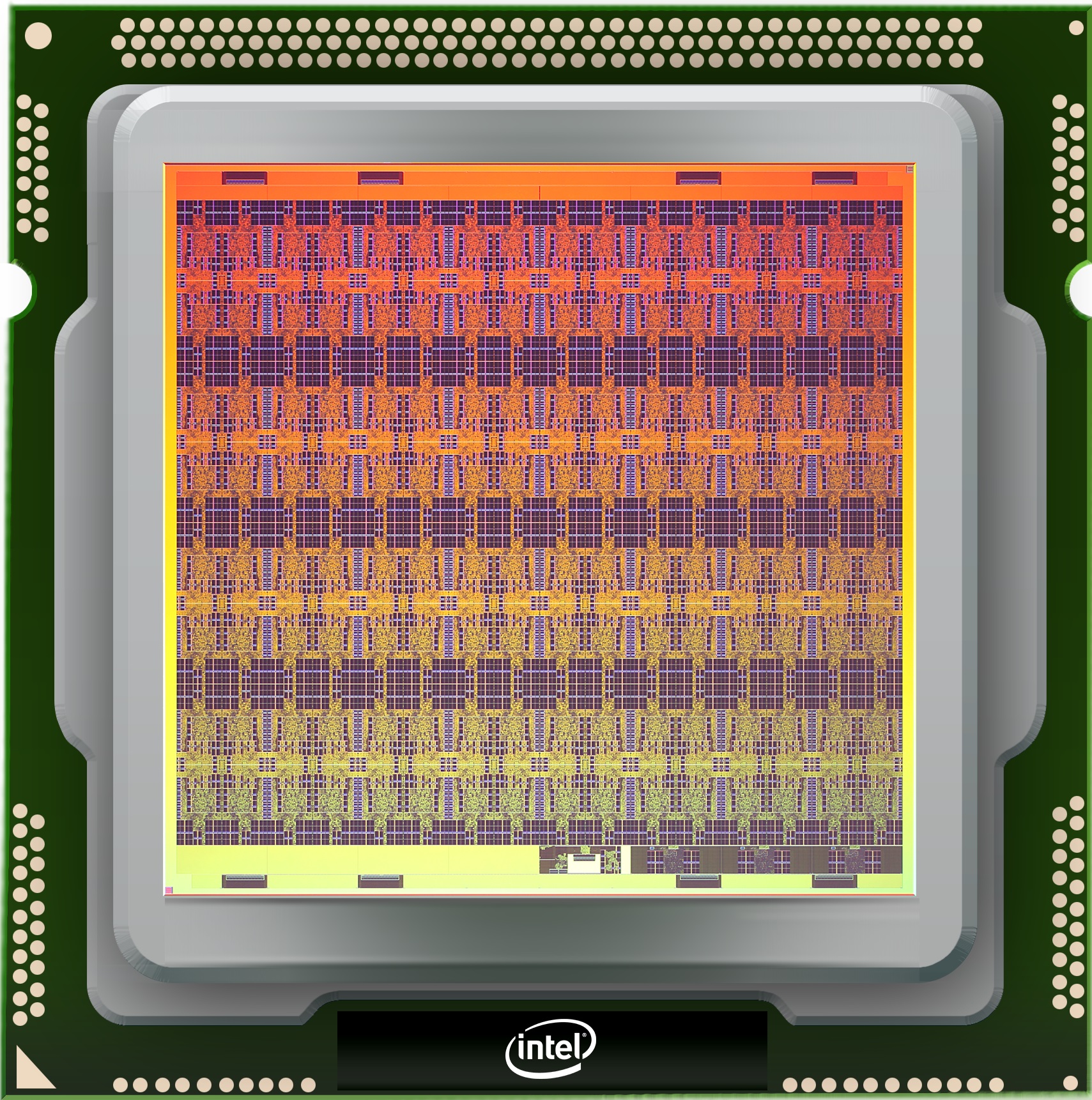

Despite the efficiency gains of unconventional computation, the energy use of information processing alone had reached a hundred quintillion (10²⁰) joules per year by 2040, compared with a hundred trillion (10¹⁴) joules just twenty years prior. On the expectation that energy demand would continue to increase wherever it could, solar-powered satellite computers were launched into orbit around Earth in huge quantities. Once stable, they combined to form a federated entity known as the Infinity Mirror: a fleet of supercomputers that encircled Earth, a distant descendant of vacuum tubes, microprocessors, GPUs, and photonic cores.

Of the 90,000 terawatts of solar power absorbed by Earth’s surface, a small quantity was caught to power the machine, a glassy overseer suspended at all times above the Arctic that ran the simulations integral to planetary intelligence and bounced the rest into space. Computation had joined many other industrial activities (mining, material processing, toxic synthesis) off-planet, leaving the biosphere to bloom once more beneath its technological shell.

With the functionally limitless boost in computational capacity operating beyond the scope of anything possible on Earth, models of the galaxy began to predict fluctuations in the output of distant stars comparable to our own: technosignatures considered statistically incontrovertible, a suggestion of life at work. Though the distance between star systems remained, the predictions were considered contact of a sort. The discovery of life not as physical presence but mathematical certainty.

It remained unlikely that frail humans would ever reach those distant places to find out for sure. But the number of twinkling suns continued to increase the longer the simulation ran. Perhaps this was what it meant to be the first intelligence in a youthful universe, they thought, pained by their ongoing solitude but confident of things to come.